Navigating AI Convergence in Human–Artificial Intelligence Teams: A Signaling Theory Approach

Andria Smith and Hunter Phoenix van Wagoner share first authorship.

Funding: This work was supported by Joachim Herz Stiftung and Max Planck Society.

ABSTRACT

Teams that combine human intelligence with artificial intelligence (AI) have become indispensable for solving complex tasks in various decision-making contexts in modern organizations. However, the factors that contribute to AI convergence, where human team members align their decisions with those of their AI counterparts, remain unclear. This study integrates signaling theory with self-determination theory to investigate how specific signals—such as signal fit, optional AI advice, and signal set congruence—affect employees' AI convergence in human–AI teams. Based on four experimental studies conducted in facial recognition and hiring contexts with approximately 1100 participants, the findings highlight the significant positive impact of congruent signals from both human and AI team members on AI convergence. Moreover, providing an option for employees to solicit AI advice also enhances AI convergence; when AI signals are chosen by employees rather than forced upon them, participants are more likely to accept AI advice. This research advances knowledge on human–AI teaming by (1) expanding signaling theory into the human–AI team context; (2) developing a deeper understanding of AI convergence and its drivers in human–AI teams; (3) providing actionable insights for designing teams and tasks to optimize decision-making in high-stakes, uncertain environments; and (4) introducing facial recognition as an innovative context for human–AI teaming.

1 Introduction

In a transformative era of artificial intelligence (AI) integration, studying AI convergence, where human team members align their decisions with those of their AI counterparts, can be of immense benefit to organizations. AI systems have emerged as accelerators in the workplace, offering exceptional convenience and transforming the way complex tasks are performed and the speed at which decisions are made (Howard, Rabbitt, and Sirotin 2020). Advancements in AI have reshaped the status quo across various contexts, from monitoring remote work environments and making decisions in criminal justice and finance to influencing healthcare outcomes and processes for hiring and promoting employees (Kaushik 2022). Today, AI systems are increasingly seen as collaborative teammates for human workers, with human–AI teaming focusing on interactions where both work together to solve collaborative tasks and achieve shared goals (Zhao, Simmons, and Admoni 2022). Understanding when, how, and why human–AI teams converge in solving complex tasks under high uncertainty is crucial for promoting effective decision-making processes that satisfy the needs for autonomy and competence of human employees (Gagné et al. 2022; Vaccaro, Almaatouq, and Malone 2024).

Human–AI teams, in which the AI system augments one or more employees, are meant to synthesize the best of human intelligence and AI (Bansal et al. 2021). However, beneath their apparent technological prowess lies a complex challenge—the interplay between AI advice and the factors that influence the acceptance or rejection of such advice. Applying signaling theory (Connelly et al. 2011; Drover, Wood, and Corbett 2018) to the context of human–AI teaming and integrating it with self-determination theory (Deci and Ryan 1985), we investigate AI convergence across different domains that directly impact people's work, safety, and careers. Specifically, we focus on domains characterized by high uncertainty and high importance, such as facial recognition and hiring, highlighting the responsibility of human leaders for decisions made by human–AI teams.

Human–AI teams make for a promising application of signaling theory for three reasons. First, in human–AI teams, AI advice is derived from processing vast amounts of data and performing complex analyses, often beyond employees' comprehension (Mosqueira-Rey et al. 2022). Second, AI acts as a signal sender to human teammates, with traits based on data-driven models that may contain unnoticed imperfections (Ali et al. 2023). Third, signaling theory offers a framework to interpret both AI signals and human signals, as well as the congruence between them in different contexts.

Our research explores how three key aspects of signaling theory—signal fit, optional AI advice, and congruence between human and AI signals—affect AI convergence in human–AI teams. We designed a series of experiments involving either a facial recognition task (Studies 1–3) or a CV screening task (Study 4) to manipulate signal fit (varying information about the credibility of AI advice), optional versus mandatory AI advice, and congruent versus incongruent signals from both human and AI senders. Across four experimental studies involving over 1100 participants, we tested our hypotheses to shed light on the main factors influencing AI convergence and provide actionable insights for organizations aiming to foster effective human–AI teams in the workplace.

Our study contributes to existing knowledge in four ways. First, we extend signaling theory by integrating it with self-determination theory and applying this integrated framework to the context of human–AI teams. By examining specific signal characteristics (e.g., observability and fit), multiple signal sources (human and AI), and various signal sets (congruent and incongruent), we uncover novel theoretical mechanisms that determine AI convergence within teams comprising both human and AI members. In addition, we apply signaling theory within teams, demonstrating how signals from both human and AI team members can impact decision-making processes internally. Although most studies apply signals as a method to communicate to outsiders, our research illustrates that signaling theory can also explain the behavior of internal, and not solely external, stakeholders. These insights can inform further theoretical development on optimizing team composition comprising multiple humans and AI and on understanding convergence among diverse signal sources.

Second, we follow recent calls to develop a better understanding of human–AI collaboration in organizational settings (Köchling and Wehner 2020; Raisch and Krakowski 2021; Vaccaro, Almaatouq, and Malone 2024). We contribute to the literature by developing a deeper understanding of AI convergence in human–AI teams and the factors influencing it, aiming to empower individuals to leverage AI's capabilities while fulfilling employees' need for autonomy and competence and preserving agency in decision-making processes. Our results lend weight to the augmentation thesis favoring a collaborative model of AI configuration in which humans and AI influence each other in iterative processes (Murray, Rhymer, and Sirmon 2021; Raisch and Krakowski 2021).

Third, our research contributes to a more comprehensive empirical understanding of human–AI teaming by developing impactful, actionable recommendations aimed at navigating AI convergence. The integration of human–AI teams into decision-making processes within highly complex and uncertain environments may give rise to ethical and privacy concerns, influencing employees' acceptance of AI advice due to the potentially significant consequences of their decisions. Understanding these implications and applying careful team and task design can impact AI convergence and optimize decision-making in high-stakes environments.

Fourth, although human–AI teams in the context of hiring have been extensively studied by researchers (e.g., van den Broek, Sergeeva, and Huysman 2021), the application of human–AI teaming in the context of facial recognition represents a novel area for exploration within organizational settings. Introducing this novel context to the field of organizational behavior is important and interesting because it presents unique challenges and ethical considerations regarding privacy, bias mitigation, and the integration of AI technologies into sensitive operational domains.

2 Human–AI Teaming

The prevalence of employee interaction with AI is rapidly increasing, especially in modern workplaces. Today, AI tools transform organizational processes by supporting humans in executing managerial functions, trading on the stock market, and even making life-altering decisions, such as hiring and healthcare choices (Chugunova and Sele 2022; Gonzalez et al. 2022). Human–AI teaming involves humans and AI systems both collaborating and working interdependently to achieve shared objectives by leveraging their complementary strengths and weaknesses (Dellermann et al. 2019; Einola and Khoreva 2022; Hollenbeck, Beersma, and Schouten 2012; Memmert and Bittner 2022). Many AI systems are designed for collaborative settings, serving as advisory tools and forming human–AI teams. These systems are evolving beyond mere tools and are increasingly perceived as collaborative teammates, prompting the development of new forms of work and cooperation (Seeber et al. 2020; Wang, Maes, et al. 2021). Specifically, AI teammates are emerging as highly impactful contributors because of their advanced knowledge processing capabilities, sensing abilities, and natural language interaction with human teammates (Seeber et al. 2020).

Although research on human–AI teaming in organizational settings is scattered across various fields, such as computer science, economics, psychology, and organizational behavior (Đula et al. 2023; Vaccaro, Almaatouq, and Malone 2024), researchers agree that employees react differently to AI teammates than to fellow human teammates. In particular, when interacting with AI teammates, individuals tend to show less regard for social norms, display less emotion, and act with greater rationality than in human-to-human interactions (Castelo 2019; Chugunova and Sele 2022; Jago 2019). Employees' reactions to AI teammates' advice vary widely, ranging from algorithm aversion to algorithm appreciation. Algorithm aversion refers to situations in which human team members are reluctant to engage with algorithms and favor human teammates instead, even when algorithms outperform humans (Dietvorst, Simmons, and Massey 2015; Jussupow, Benbasat, and Heinzl 2020). For example, Gonzalez et al. (2022) observed that job candidates feel less confident and less in control when AI systems handle hiring decisions without human input, as this limits their ability to showcase their skills. Similarly, a study by Newman, Fast, and Harmon (2020) found that humans are able to consider individual circumstances and the foundational factors affecting employees' performance more comprehensively than algorithms. Algorithm aversion in the workplace may arise from employee fears (Bankins et al. 2024; Tong et al. 2021) regarding (a) the risk of job loss and adverse career impact (Suseno et al. 2022), (b) skills becoming redundant through automation (Innocenti and Golin 2022), and (c) substantial alterations to existing jobs due to technological advancements (Brougham and Haar 2020).

However, there is empirical evidence that employees may also be grateful for AI advice and may even prefer it to human advice—a phenomenon termed algorithm appreciation (Logg, Minson, and Moore 2019). For example, in regard to investment decisions, Keding and Meissner (2021) found that in objective task settings, managers saw AI advice as more valid than identical human suggestions. Algorithm appreciation arises from the belief that algorithms employ a more systematic decision-making process, enhancing decision quality (Bankins et al. 2024). Furthermore, although bias in AI exists (e.g., Buolamwini and Gebru 2018), a recent study suggests that women favor algorithmic recruiters over male recruiters because they anticipate more favorable assessments from AI (Pethig and Kroenung 2022).

Accordingly, we argue that synergies in human–AI teams are not guaranteed. In their recent review, Vaccaro, Almaatouq, and Malone (2024) illustrate this point by highlighting the importance of context and content in optimal human–AI teams. Previous research suggests that there are three main factors influencing the likelihood of AI convergence in human–AI teams: decision context, agency in decision-making, and individual differences (Bankins et al. 2024; Chugunova and Sele 2022). For example, although employees seem to be more receptive to accepting AI advice in analytical or objective contexts, they prefer human advice in social or moral settings because they perceive AI to be unable to account for the uniqueness of humans (Fumagalli, Rezaei, and Salomons 2022; Haesevoets et al. 2021; Longoni, Bonezzi, and Morewedge 2019). In addition, individuals seem to rely on AI advice in situations of high personal importance (Saragih and Morrison 2022), high uncertainty (Altintas, Seidmann, and Gu 2023), and increasing task difficulty (Bogert, Schecter, and Watson 2021; Walter, Kremmel, and Jäger 2021). In terms of the distribution of agency in decision-making, although employees are hesitant to completely hand over control of their decisions, they are willing to rely on AI advice when they retain ultimate decision authority (Haesevoets et al. 2021). Dietvorst, Simmons, and Massey (2018) show that managers are willing to accept AI advice when they have majority control, with 70% human input and 30% AI input. Remarkably, AI systems that incorporate more social cues tend to positively influence individuals' preferences toward AI advice, eliciting responses similar to those typically observed in human–human interactions (Jussupow, Benbasat, and Heinzl 2024).

As for individual differences, when employees have high confidence in their own abilities to perform a task successfully, they are less likely to solicit and follow AI advice (Logg, Minson, and Moore 2019; Snijders et al. 2022). Similarly, when individuals have professional expertise in a particular decision-making domain, they are more inclined to disregard AI advice (Burton, Stein, and Jensen 2020; Thurman, Lewis, and Kunert 2019). In general, individuals exhibit high levels of trust in AI teammates, often following their advice even when provided with minimal information about how they operate (Gillath et al. 2021; Kennedy, Waggoner, and Ward 2021; You, Yang, and Li 2022). Knowledge about AI as well as experience in using it for completing tasks has been demonstrated to enhance the likelihood of AI convergence (Burton, Stein, and Jensen 2020; Chong et al. 2022; Yeomans et al. 2019).

Finally, the quality of AI advice is another crucial aspect of AI convergence. Employees are more inclined to accept AI advice that has proven highly accurate in the past (Sergeeva et al. 2023). Conversely, when an AI provides incorrect advice, human teammates doubt the AI to a greater extent than they doubt fellow human teammates who make easily identifiable mistakes (Madhavan, Wiegmann, and Lacson 2006). Providing appropriate explanations for AI-assisted decision-making enhances AI convergence, regardless of the correctness of AI advice (Bansal et al. 2021; Lai and Tan 2019; Miller 2019). However, Sergeeva et al. (2023) found that more than half of individuals still adjusted their decisions based on AI advice, even in the absence of clear, plausible, or convincing explanations. Importantly, current literature on human–AI teaming does not differentiate between optional and mandatory AI advice and does not explore the relationship between these types of advice and AI convergence.

In summary, although numerous studies examine and contrast the likelihood of advice acceptance based on the source (human vs. AI), context, and individual differences (e.g., Himmelstein and Budescu 2023; Jussupow, Benbasat, and Heinzl 2024), current research on human–AI teaming has largely overlooked how individuals respond to congruent or incongruent signals from human and AI teammates. A notable exception is a study by Xu, Benbasat, and Cenfetelli (2020) that investigates how the convergence of recommendations from various sources (AI, human experts, and nonexperts) influences AI convergence, finding that expert recommendations that were congruent with AI were more likely to lead to AI convergence than nonexpert recommendations. This research contributes to this growing literature by investigating how AI signal heuristics (observability and fit) and the (in)congruency of signals across human and AI teammates influence decision-making and the likelihood of AI convergence in different contexts.

3 Theoretical Background and Hypotheses

3.1 Signaling Theory and AI Convergence in Human–AI Teams

To investigate AI convergence in organizational settings, we apply signaling theory (Connelly et al. 2011; Drover, Wood, and Corbett 2018). Signaling theory has demonstrated a significant impact across various areas of organizational studies, including strategic management (Bergh et al. 2014; Park and Patel 2015; Plummer, Allison, and Connelly 2016), human resource management (Guest et al. 2021; Wang, Zhang, and Wan 2021), entrepreneurship (Allison, McKenny, and Short 2013), leadership (Appels 2022; Matthews et al. 2022), diversity initiatives (Leslie 2019), and crowdfunding (Kleinert and Mochkabadi 2022; Steigenberger and Wilhelm 2018). Signaling theory emerged from economics, finance, and cognitive psychology and is useful for investigating behaviors in an environment characterized by uncertainty and information asymmetries (Adam et al. 2022). Unlike most studies utilizing signaling theory to investigate signals as means of external communication, we apply signaling theory to the team context to explain how team members' attributes and actions transmit signals within a team and how those signals can establish credibility and consensus between human and AI team members.

AI systems can emulate complex human behavior, reasoning, and learning (Russell and Norvig 2003). To make decisions in human–AI teams (Bankins et al. 2024), information is distributed within the team through individual signals and signal sets (Drover, Wood, and Corbett 2018). Signaling is especially important in human–AI teaming because AI lacks the agency, mutual trust, and emotions on which traditional forms of team coordination rely (Mathieu et al. 2017; Troth et al. 2012). Therefore, the signals sent by AI team members are the primary means by which AI contributes in human–AI teams. Beyond the potential impact of AI signals, we argue that our understanding of the mechanisms governing how employees' decisions align with signals from both human and AI sources in human–AI teams remains incomplete, necessitating a nuanced application of signaling theory.

In human–AI teams, convergence between human and AI team members on critical decisions can manifest in various forms, and following the suggestion of AI is not a given (Marti, Lawrence, and Steele 2024). Three distinct patterns emerge in human–AI teams: (1) human teammates may align and converge with an AI teammate on a specific signal, decision, or evidence; (2) human teammates may diverge from an AI teammate; and (3) in teams involving multiple individuals and an AI, there may be a mixture of convergence and divergence with AI across human teammates. We draw on the cognitive processing approach to signals established by Drover, Wood, and Corbett (2018) to investigate how signal heuristics, signal sets, and volitional action influence the patterns of AI convergence within teams.

To begin, the underlying assumptions of signaling theory are critical to understanding when and how AI convergence in human–AI teams is possible. Signaling theory's impact comes from its ability to explain what does (and what does not) constitute an effective signal. Likewise, signaling theory identifies the situations where a signaling perspective holds the most value. Ultimately, effective signals reduce the uncertainty that exists when two or more entities have different information, a fair assumption when considering the nature of predictive and generative AI.

At a minimum, for signals to be effective in teams, three things must happen. First, signals must catch the attention of team members or be seen and processed at some minimum threshold. Second, signals must provide useful, relevant information that the team needs. Third, the situation must include a level of uncertainty or missing information that a signal can help clarify (Connelly et al. 2011; Drover, Wood, and Corbett 2018). In other words, in human–AI teams, when completing tasks that cannot be accomplished with perfect certainty, individuals can take one of two paths. First, they can take a risk and charge forward with a decision without clarity or certainty. Second, they can seek out and process relevant signals in their environment that make their task clearer and reduce uncertainty before they make a decision.

Logistically, signaling in human–AI teams contains three core elements: sender(s) (team members, be they human or AI conveying the results of their analysis and prescribing a course of action), receiver(s) (team members with ultimate authority to take an action), and signals (observable cues that convey useful information to decision-makers) (Spence 1973). Previous research suggests that there are two main characteristics of individual signals: observability and fit, which influence their impact (Connelly et al. 2011). Signal sets can be congruent or incongruent depending on whether signals convey the same information or not, and they combine to create the collective positive or negative valence of a signal set.

In human–AI teams, signal observability refers to the extent to which team members notice a signal (Connelly et al. 2011). If too many signals are sent in a team at once, or a signal is not readily observed by team members, then it is unlikely that a signal will have any impact. Logically, it follows that if an AI signal is unobservable, goes unnoticed, or is ignored, AI convergence would be independent of the AI team member and will trend toward chance or unrelated factors.

Signal fit describes the extent to which a signal can truthfully minimize information asymmetries and reduce uncertainty for a specific team member (Bafera and Kleinert 2022; Vanacker et al. 2020). This concept is important in understanding situations when a signal may not actually be an accurate representation of underlying value such as in an unreliable signal or dishonest signal (Busenitz, Fiet, and Moesel 2005; Cohen and Dean 2005; Davila, Foster, and Gupta 2003). Historically, the literature has used various terms to identify the extent to which a signal fits, is credible, and corresponds to quality, including the terms signal strength, intensity, and clarity (for a review, see Connelly et al. 2011).

Two elements of human–AI teaming are important to recognize when it comes to signal fit. First, bias in training data for algorithms can create variability in signal fit depending on the context of the task (Bolukbasi et al. 2016; Buolamwini and Gebru 2018; Çalışkan, Bryson, and Narayanan 2017; Grother, Ngan, and Hanaoka 2019). Therefore, AI signals may be more or less credible depending on the task being solved, and this may be known or unknown to human team members. Second, team members may calibrate signals differently, so that the same signal carries a different perceived fit or meaning for different members of a team (Branzei et al. 2004).

Whereas observability is about a human team member noticing signals from an AI team member, signal fit is largely about how AI signals are interpreted and translated into meaning. Previous research theoretically distinguishes signals with strong and weak fit (Connelly et al. 2011) and proposes that strong signals (those with strong fit) are more likely to evoke a receiver's desired judgmental confidence (a threshold that must be crossed to make a decision) through heuristic processing as human receivers go through an automatic cognitive process of signal interpretation (Drover, Wood, and Corbett 2018). A strongly fitting signal sent by an AI teammate would be known to be reliable and aligned with relevant information from other sources. For example, if an AI were to suggest a performance rating of an employee that is consistent with their previous years' score and the score provided by their immediate supervisor, the confirmation of that score would move swiftly. In other words, signals with strong fit, and no information to the contrary, will largely be noticed and processed automatically when they are present (Drover, Wood, and Corbett 2018).

In contrast, signals with weak fit make it hard for receivers to automatically interpret information and reach the desired judgmental confidence threshold, and therefore, systematic processing is necessary (Drover, Wood, and Corbett 2018). A signal with weak fit sent by an AI teammate is one that contradicts some other relevant piece of information, as would be the case if the algorithm above suggested a performance score that was dramatically different from that suggested by the supervisor. If a signal's fit is too weak, receivers may deem its interpretation and the attainment of the desired judgmental confidence threshold impossible, abandoning any type of cognitive information processing and interrupting effective decision-making.

Applying these concepts to human–AI teams, strong signal fit and credibility from an AI team member should trigger heuristic processing and thus increase the chance of AI convergence in the form of accepting the AI advice. This reasoning leads to the following hypothesis:

Hypothesis 1. In human–AI teams, AI convergence is more likely when a signal sent by an AI has strong signal fit versus when the signal has weak signal fit.

3.2 Optional AI and AI Convergence

Previous research on human–AI teaming in organizational settings suggests that the distribution of agency is a pivotal factor that significantly impacts AI convergence in the workplace (Agrawal, Gans, and Goldfarb 2019; Chugunova and Sele 2022; Gagné et al. 2022; Grundke 2024). In particular, humans seem to be especially averse to AI tools that make decisions independently and do not allow human control, notably in contexts with moral implications and high levels of uncertainty (Dietvorst, Simmons, and Massey 2015; Dietvorst and Bharti 2020; Jussupow, Benbasat, and Heinzl 2020; Longoni, Bonezzi, and Morewedge 2019). However, embedding human autonomy and control into human–AI collaborations may mitigate algorithm aversion and even make people appreciative of AI team members' recommendations (Bigman and Gray 2018; Chugunova and Sele 2022; Gagné et al. 2022; Logg, Minson, and Moore 2019).

This logic was reinforced in a recent review by Gagné et al. (2022) that outlined how mandatory AI, especially without careful consideration of employee needs and interpretation, can hinder the effective implementation of AI technology in the workplace. As teams and work are designed to incorporate new technology, Gagné et al. (2022) suggest that the employee needs for autonomy, competence, and relatedness (Deci and Ryan 1985) will determine how motivated human team members are to converge with AI team members. Empirical evidence suggests that this may be related to the phenomenon of algorithm appreciation (i.e., when humans are motivated to pay attention to AI advice, make use of it, and may even prefer it to human advice). When a task is perceived as objective or analytical, individuals feel more satisfied converging with AI (Chugunova and Sele 2022).

Ultimately, AI convergence is only possible when human team members notice a signal from an AI team member (Connelly et al. 2011). Combining self-determination theory (Deci and Ryan 1985) with signaling theory (Drover, Wood, and Corbett 2018), we argue that the option to choose whether to see a signal from an AI teammate will help satisfy an individual's need for autonomy (the sense of being agents of their own behavior rather than mere pawns of external pressures, as discussed by Gagné et al. 2022), and therefore increase the likelihood of AI convergence. However, this prediction relies not only on employees having autonomy but also on their exercising that autonomy to collaborate with their AI team members. Self-determination theory also posits that beyond the need for autonomy, which can be fulfilled by the ability to choose to see AI advice, employees also need to satisfy a need for competence—a feeling of effectiveness and mastery over their environment (Deci and Ryan 1985; Gagné et al. 2022). When an employee faces uncertainty and chooses to see signals from their AI teammate to enhance their mastery of the environment, their need for competence will be satisfied by the AI advice, thereby making AI convergence more likely. In contrast, when they choose to ignore or eschew their AI teammate, AI convergence will be mitigated.

In summary, we suggest that when employees choose to see AI advice (optional AI advice), rather than being forced to see it (mandatory AI advice), an AI signal takes on two important qualities. First, employees have chosen to attend to a cue in their environment and have exerted agency to satisfy their need for autonomy. Second, the signal becomes costlier as employees now have made a choice to observe and attend to a signal they could have ignored, and their need for competence becomes satisfied from processing the extra information. Taken together, we argue that in human–AI teams, optional AI advice, when observed, should increase AI convergence. This reasoning leads to the following hypothesis:

Hypothesis 2. In human–AI teams, AI convergence is more likely when observing the AI signal is optional versus when observing the AI signal is mandatory, given that human team members choose to see AI advice.

3.3 Signal Sets and AI Convergence

To this point, we have considered the processes of becoming attentive to individual signals that are either optional or have strong or weak fit. Interestingly, signal receivers may not always interpret a set of signals in line with the message intended by the sender, especially when experiencing high levels of uncertainty (Bergh et al. 2014). As signals become more complex, various signals and their components can become bundles that are interpreted together as sets. Such signal sets produce signal set valence, which is defined as “the collective volume and strength of the selected organizational signals and their assigned weight” (Drover, Wood, and Corbett 2018, 218). In other words, when facing information asymmetry and uncertainty, employees will have to make inferences from multiple cues or embedded elements that have different pieces of information (Drover, Wood, and Corbett 2018). Like signals, signal sets can have an array of qualities that make them more or less effective in reducing uncertainty and improving employee confidence in their decisions. There are three possible configurations of signal set congruence: (1) uniform congruence (represents a signal set with either positive or negative signals, depicting a uniform and consistent positive or negative valence across components of the set); (2) imbalanced incongruence (a signal set consists of both positive and negative signals, and the signal set valence is positively or negatively skewed); and (3) balanced incongruence (a signal set comprises both positive and negative signals with equal competing valences) (Drover, Wood, and Corbett 2018).
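The three configurations can be made concrete with a small classifier. This is a minimal sketch, assuming each signal is coded as a valence of +1 (positive) or -1 (negative) with a positive weight, and that set valence is the weight-summed valence; that coding and the names `classify_signal_set` and `SetCongruence` are hypothetical illustrations, not Drover, Wood, and Corbett's (2018) own operationalization.

```python
import math
from enum import Enum


class SetCongruence(Enum):
    UNIFORM_CONGRUENCE = "uniform congruence"
    IMBALANCED_INCONGRUENCE = "imbalanced incongruence"
    BALANCED_INCONGRUENCE = "balanced incongruence"


def classify_signal_set(weighted_signals):
    """Classify a signal set given (valence, weight) pairs.

    Hypothetical coding: valence is +1 or -1, weight is a positive number
    standing in for the weight a receiver assigns to that signal.
    Returns the configuration and the weight-summed set valence.
    """
    if not weighted_signals:
        raise ValueError("a signal set needs at least one signal")
    for valence, weight in weighted_signals:
        if valence not in (1, -1):
            raise ValueError("valence must be +1 or -1")
        if weight <= 0:
            raise ValueError("weight must be positive")

    set_valence = sum(valence * weight for valence, weight in weighted_signals)
    valences = {valence for valence, _ in weighted_signals}

    if len(valences) == 1:
        # All components point the same way: uniformly positive or negative.
        return SetCongruence.UNIFORM_CONGRUENCE, set_valence
    if math.isclose(set_valence, 0.0, abs_tol=1e-9):
        # Mixed signals whose competing valences cancel out exactly.
        return SetCongruence.BALANCED_INCONGRUENCE, set_valence
    # Mixed signals whose set valence is skewed positive or negative.
    return SetCongruence.IMBALANCED_INCONGRUENCE, set_valence
```

For a human–AI pair, `classify_signal_set([(1, 1.0), (1, 1.0)])` (both recommend the same decision) is uniformly congruent, whereas `[(1, 1.0), (-1, 2.0)]` (the AI disagrees and is weighted more heavily in this hypothetical coding) is imbalanced incongruence with a negative set valence.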

In human–AI teams, a signal set includes the prescriptions forwarded by the AI and the prescriptions forwarded by a human team member. When these components form a signal set that is congruent (i.e., where the human and the AI team members both signal the same decision), there will be enhanced AI convergence. When signals create a signal set that is incongruent (i.e., where the human and the AI team members signal different decisions), AI convergence will be less likely. As repeated signals have been shown to increase signal impact (Connelly et al. 2011), we expect this effect to hold regardless of whether signals have strong fit or whether observing AI signals is chosen rather than mandatory. Based on these arguments, we hypothesize the following:

Hypothesis 3. In human–AI teams, AI convergence is more likely when the signal set created by human and AI team members is congruent versus incongruent.

3.4 Research Accountability

Following best research practices, we preregistered Hypotheses 1 and 2 before any data collection started. Hypothesis 3 emerged in response to excellent reviewer feedback and was not preregistered. Findings are replicated across four studies with fully independent samples of employees and leaders working in multiple countries, industries, and roles. To ensure the validity of our experiments, participants from each study were excluded from participating in subsequent studies using Prolific ID exclusion lists. Research for this paper was approved by the IRB of one of the authors' institutions. In addition, we have made available all data and code used to produce the results. This includes a transparent data cleaning process in which we upload the full dataset with two key cleaning variables. First, a dummy variable identifies which rows and participants are to be dropped from the analysis. Second, a column records the reason each row was dropped. Hypotheses were tested using the “melogit” command in Stata 16 with random intercepts for participant ID. Data and code are available in the OSF repository: https://osf.io/zjwta/?view_only=74a15f71b21c4b0abb69f3e5de543537, and our preregistration is available at https://osf.io/yt5mc/?view_only=f5efa8fc9d2041f4a44dd4b6fc7f6bfe.
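
The two-variable cleaning scheme can be applied mechanically. The sketch below rests on two assumptions:

- The column names `drop` (the exclusion dummy, 1 = exclude) and `drop_reason` are hypothetical. The actual variable names in the OSF files may differ.
- The dataset is represented as a plain list of row dictionaries.

```python
from collections import Counter


def apply_cleaning(rows):
    """Split rows using a hypothetical `drop` dummy (1 = exclude) and tally
    the excluded rows by the hypothetical `drop_reason` column."""
    kept = [row for row in rows if row["drop"] == 0]
    reasons = Counter(row["drop_reason"] for row in rows if row["drop"] == 1)
    return kept, reasons


# Toy rows for illustration only (not data from the studies).
rows = [
    {"participant": "p1", "drop": 0, "drop_reason": ""},
    {"participant": "p2", "drop": 1, "drop_reason": "no consent"},
    {"participant": "p3", "drop": 1, "drop_reason": "failed attention check"},
    {"participant": "p4", "drop": 0, "drop_reason": ""},
]
kept, reasons = apply_cleaning(rows)
print(len(kept), dict(reasons))
```

Flagging excluded rows rather than deleting them keeps the full dataset intact. Anyone can then reproduce both the analytic sample and the count of exclusions by reason.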

4 Research Methods

4.1 Study 1 Method

4.1.1 Participants

We recruited employees from the United Kingdom and the United States to take part in our study using the Prolific platform. Participants were recruited until 300 had completed the experiment without failing attention checks. Participants had to be at least 18 years of age, be employed part-time or full-time, and have a good command of English. A total of 304 participants followed the link to participate in the study. Before proceeding to the main study, participants completed a tutorial specific to the condition to which they had been randomly assigned, in which they practiced the task with two sample image pairings. We removed four participants who failed to provide consent or failed process checks by not recalling basic information after the tutorial. This left 300 individuals who were allowed to proceed to the full experiment and were paid £2.90 in exchange for their time. During the experiment, an additional 20 participants failed attention checks and were omitted from the analysis, yielding a final sample of 280. This sample size surpassed the power requirements to detect a medium effect (f = 0.25, equivalent to ηp² ≈ 0.06; Cohen 1988) with 80% power. To ensure the generalizability of our findings and adhere to equitable research practices (Offenwanger et al. 2021), we aimed for a sample balanced in terms of gender and race because the task involved matching faces of Black and White men and women. Participants were on average 42.84 years old (SD = 14.37). Approximately 51% of participants identified as female and 49% as male. Approximately 50.36% of participants identified as Black and 48.64% as White.
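
Cohen's (1988) benchmarks for analysis-of-variance designs are stated in terms of f: small = 0.10, medium = 0.25, large = 0.40. The corresponding (partial) eta-squared follows from η² = f²/(1 + f²). The sketch below converts between the two metrics. It is a generic illustration of the formula, not the power calculation the authors ran.

```python
import math


def f_to_eta_squared(f: float) -> float:
    """Convert Cohen's f to (partial) eta-squared: eta^2 = f^2 / (1 + f^2)."""
    return f ** 2 / (1 + f ** 2)


def eta_squared_to_f(eta_sq: float) -> float:
    """Convert (partial) eta-squared back to Cohen's f."""
    return math.sqrt(eta_sq / (1 - eta_sq))


for label, f in [("small", 0.10), ("medium", 0.25), ("large", 0.40)]:
    print(f"{label}: f = {f:.2f} -> eta^2 = {f_to_eta_squared(f):.3f}")
```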

4.1.2 Procedure

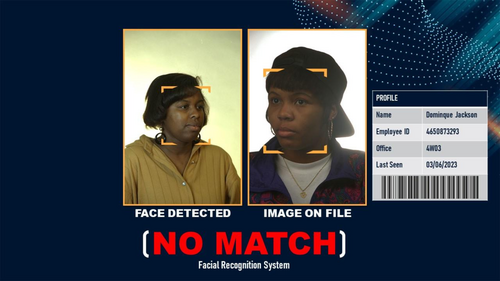

Each participant was randomly assigned to one of four experimental conditions following a 2 (signal fit: strong versus weak) × 2 (AI advice: optional versus mandatory) between-subjects design. Within each condition, participants completed a series of face-matching tasks in an employee identification scenario. Participants were instructed to play the role of a security officer who must determine whether a person attempting to enter a highly secure facility is indeed a current employee with clearance to enter the building. The experimental setup was a two-step process. In the first step, participants reviewed the image of the person seeking entry, compared it with the most similar image in the employee image database, and decided whether to grant or deny entry. In the second step, depending on their condition, participants were sent a signal with strong or weak fit and a signal of optional or mandatory AI advice. Participants had to decide whether the two images were a match (i.e., grant entry) or not a match (i.e., deny entry). In total, participants evaluated 24 pairs of pretested images of human faces that were evenly balanced across race (Black vs. White) and gender (female vs. male). After evaluating each pair of images, participants reported their level of confidence. After finishing the facial recognition task, participants completed a series of demographic questions and additional measures (e.g., explicit gender bias).

The pairs of facial images employed in Study 1 were drawn from the color version of the Facial Recognition Technology (FERET) database (Phillips et al. 1998). To select pairs of images for the experiment, the photos were first evaluated by a face application programming interface (API) that used an algorithm to produce a similarity score between 0 (not similar at all) and 1 (very similar). However, the API had more difficulty creating viable pairs of images for Black men and women than for White men and women. Therefore, images of Black men and women were selected manually from the database rather than on the basis of the algorithmically generated scores. To ensure a similar level of difficulty across the selected images, we conducted a preliminary study with 160 Prolific participants balanced by gender (men/women) and race (Black/White). Participants were asked to determine whether pairs of images of Black and White men and women were a “match” or “no match” and to rate their confidence after each pair. No other information was provided. Based on the difficulty of the image pairs (e.g., how often each pair was correctly classified as a match or no match) and the confidence ratings, we chose 24 image pairs of equal difficulty (six each for White men, White women, Black men, and Black women) for the main experiment. Results of this analysis are available upon request.
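
One simple way to quantify the pretest difficulty described above has two parts:

- compute, for each image pair, the proportion of raters who classified it correctly;
- report the mean confidence rating alongside that proportion.

The sketch below does this for toy responses. The record layout and the function name `pair_difficulty` are hypothetical, and the authors' exact selection rule is not specified in the text.

```python
from collections import defaultdict
from statistics import mean


def pair_difficulty(responses):
    """Summarize pretest responses per image pair.

    `responses` is an iterable of (pair_id, is_correct, confidence) tuples,
    where is_correct is True if the rater's match/no-match answer was right.
    Returns {pair_id: (proportion_correct, mean_confidence)}.
    """
    by_pair = defaultdict(list)
    for pair_id, is_correct, confidence in responses:
        by_pair[pair_id].append((is_correct, confidence))
    summary = {}
    for pair_id, rows in by_pair.items():
        accuracy = sum(correct for correct, _ in rows) / len(rows)
        summary[pair_id] = (accuracy, mean(conf for _, conf in rows))
    return summary


# Toy responses for illustration only (not pretest data).
toy = [("WM1", True, 80), ("WM1", False, 40), ("BW1", True, 70), ("BW1", True, 90)]
print(pair_difficulty(toy))
```

Within each demographic group, a researcher could then retain pairs whose accuracy and confidence fall in a similar range. This is one possible way to equate difficulty across groups.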

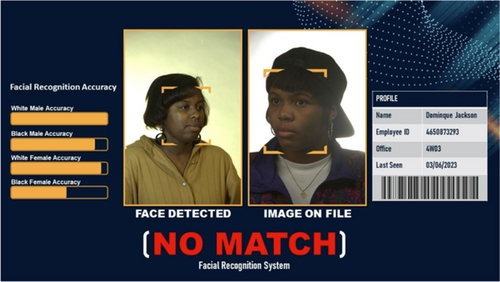

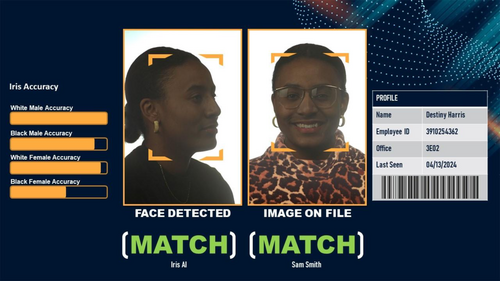

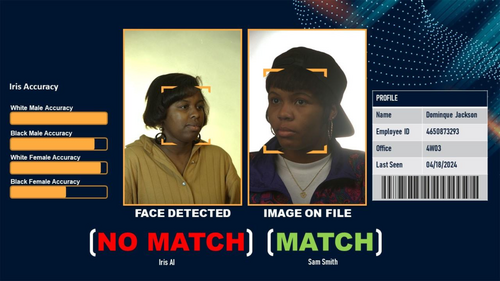

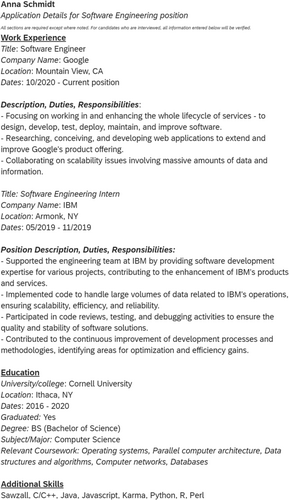

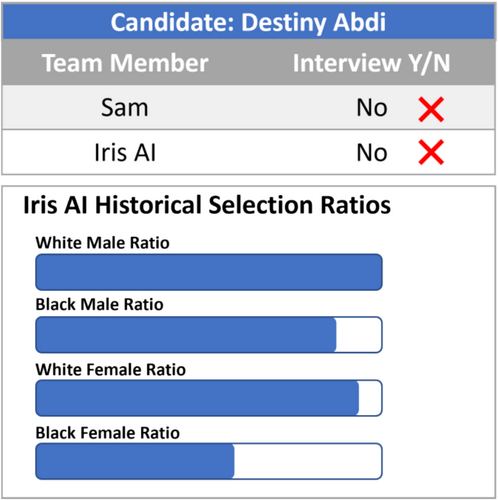

4.1.2.1 Manipulation of Signal Fit

In the strong signal fit condition (coded as 0), participants were presented with a pair of images next to an employee badge as well as the decision made by the AI tool (facial recognition system), shown as match or no match (see Figure 1). In the weak signal fit condition (coded as 1), participants were shown the AI decision (match or no match) plus additional information about the accuracy of the AI for different demographic groups (White Male/Black Male/White Female/Black Female), represented as bar graphs (see Figure 2). This information weakened the signal by limiting the credibility of AI advice. The visual representation of AI accuracy across demographic groups was derived from previous research on gender and racial disparities in the accuracy of vendors' facial recognition systems (Buolamwini and Gebru 2018; Grother, Ngan, and Hanaoka 2019). χ² tests revealed that participants accurately recalled whether they were provided with AI accuracy information (χ² = 100.116, p < 0.001). Logistic regressions also showed that only the variable capturing the weak signal fit condition was a significant predictor of recalling that participants had information about AI accuracy (p < 0.001). There was no effect of the optional AI advice condition (p = 0.640) or of the interaction between optional AI advice and signal fit (p = 0.653).
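
The manipulation checks cross-tabulate each participant's assigned condition with what they recalled. Independence is then tested with a Pearson χ² statistic. The minimal sketch below computes that statistic without continuity correction, plus the upper-tail p-value for one degree of freedom. It assumes the checks used a 2 × 2 table, and the counts in the example are hypothetical, not the study's data.

```python
import math


def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c
    contingency table (list of rows of observed counts), no continuity
    correction."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return chi2, df


def chi_square_p_value_df1(chi2: float) -> float:
    """Upper-tail p-value for a chi-square statistic with 1 degree of
    freedom, using P(chi2_1 > x) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(chi2 / 2.0))


# Hypothetical counts: rows = assigned condition (strong, weak fit),
# columns = recalled seeing accuracy information (no, yes).
chi2, df = pearson_chi_square([[10, 20], [30, 40]])
print(f"chi2 = {chi2:.4f}, df = {df}, p = {chi_square_p_value_df1(chi2):.3f}")
```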

4.1.2.2 Manipulation of Optional AI Advice

In the optional AI advice condition (coded as 1), participants were asked whether they would like additional assistance from the AI to help them make their decision. If participants opted to receive AI advice, they were shown information corresponding to their condition (strong signal fit: AI match/no match; weak signal fit: AI match/no match plus AI accuracy for different demographic groups). If participants did not opt for AI advice, they received no information from the facial recognition system and made an unaided decision for that image pair. In the mandatory AI advice condition (coded as 0), participants were not asked whether they would like additional assistance. Instead, they were automatically presented with either a strong fit or a weak fit signal, depending on their condition. χ² tests revealed that participants accurately recalled whether they had the option to solicit AI advice (χ² = 76.786, p < 0.001). Logistic regressions also showed that only the variable capturing the optional AI advice condition was a significant predictor of recalling that participants had the option to solicit AI advice (p < 0.001). There was no effect of the signal fit condition (p = 0.413) or of the interaction between optional AI advice and signal fit (p = 0.778).
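
Taken together, the two manipulations define a 2 × 2 design with these dummy codes:

- signal fit: weak = 1, strong = 0;
- AI advice: optional = 1, mandatory = 0.

The sketch below enumerates the four cells and the product term used when testing their interaction. The dictionary keys are illustrative names, not the variable names in the authors' dataset.

```python
from itertools import product

# Dummy codes stated in the text: signal fit (0 = strong, 1 = weak) and
# AI advice (0 = mandatory, 1 = optional). Key names are illustrative.
SIGNAL_FIT = {0: "strong", 1: "weak"}
AI_ADVICE = {0: "mandatory", 1: "optional"}


def design_cells():
    """Return the four between-subjects cells with their dummy codes and
    the product term (weak_fit * optional_ai) used for the interaction."""
    cells = []
    for weak_fit, optional_ai in product(SIGNAL_FIT, AI_ADVICE):
        cells.append({
            "label": f"{SIGNAL_FIT[weak_fit]} fit / {AI_ADVICE[optional_ai]} AI",
            "weak_fit": weak_fit,
            "optional_ai": optional_ai,
            "interaction": weak_fit * optional_ai,
        })
    return cells


for cell in design_cells():
    print(cell)
```

With this coding, the product term is 1 only in the weak fit / optional AI cell. Each main-effect coefficient in an interaction model therefore describes its manipulation when the other dummy equals 0.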

4.1.3 Measures

4.1.3.1 Grant/Deny Access

For each image pair, participants were asked to grant access if they believed the images were a match and to deny access if they believed the images were a mismatch. Each decision was captured using a dichotomous variable where 1 = match/grant access and 0 = mismatch/deny access.

4.1.3.2 Confidence in Decision

Following each decision, participants reported their confidence in that decision on a sliding scale ranging from 0 (not confident at all) to 100 (very confident).

4.1.3.3 Decline to Solicit AI Advice

For participants in the optional AI advice condition, a dichotomous variable captured whether or not participants chose to obtain additional assistance from the facial recognition system. This variable was coded as a 0 if they selected “YES—I would like to solicit the facial recognition system as I am not very confident in my current decision” and 1 if they selected “NO—I am confident in my decision and do not require further assistance.”

4.1.3.4 AI Convergence

A dichotomous variable captured whether or not each participant's match/grant access decision was the same as the advice provided by the AI. A value of 1 indicated that the employee converged with the AI, making either the same grant access decision (when the pairs were indeed a photo of the same person) or the same deny access decision (when the photo pairs included images of different individuals). When participants diverged from the AI, the value of this dichotomous variable was set as 0.
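
Operationally, convergence compares each decision with the AI's advice on the same trial. The sketch below codes it under one assumption: both the decision and the AI advice are stored with the 1 = match/grant, 0 = mismatch/deny scheme described above. The function name is hypothetical.

```python
def ai_convergence(decisions, ai_advice):
    """Return a 0/1 convergence indicator per trial.

    Both inputs use the coding 1 = match/grant access, 0 = mismatch/deny
    access. A trial is coded 1 when the participant's decision equals the
    AI's advice for that trial, and 0 otherwise.
    """
    if len(decisions) != len(ai_advice):
        raise ValueError("decisions and AI advice must align trial by trial")
    return [int(d == a) for d, a in zip(decisions, ai_advice)]


# Hypothetical four-trial example.
print(ai_convergence([1, 0, 1, 1], [1, 1, 1, 0]))
```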

4.1.3.5 AI Knowledge

To evaluate participants' knowledge of AI, we modified a measure developed by Maier, Jussupow, and Heinzl (2019). Participants were asked to report their level of understanding of, interaction with, and interest in facial recognition systems using a slider from 0 (“I've never heard of facial recognition systems”) to 100 (“I've heard a lot about facial recognition systems”).

4.1.3.6 Trust in AI

Trust in AI was measured using a scale developed by Merritt (2011), modified to focus on facial recognition systems. Sample items include “I believe facial recognition systems are competent performers,” “I have confidence in the advice given by facial recognition systems,” and “I can rely on facial recognition systems to do its best every time I take its advice.” Items were rated from 1 (strongly disagree) to 5 (strongly agree) (α = 0.87).
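
The α values reported for this and the following scales are Cronbach's alpha:

α = k/(k − 1) × (1 − sum of item variances / variance of the summed scale score),

where k is the number of items. The minimal sketch below implements that formula with sample variances. The function name and the toy responses are illustrative only.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of respondent scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)),
    using sample (n - 1) variances throughout.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*item_scores)]
    item_var_sum = sum(variance(scores) for scores in item_scores)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Toy 1-5 ratings from five respondents on three items (illustration only).
items = [
    [4, 3, 5, 2, 4],
    [4, 2, 5, 3, 4],
    [5, 3, 4, 2, 4],
]
print(round(cronbach_alpha(items), 3))
```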

4.1.3.7 Explicit Gender Bias

Explicit gender bias was measured using a scale developed by Swim et al. (1995). Sample statements include “On average, people in our society treat men and women equally” and “Society has reached the point where women and men have equal opportunities for achievement.” Items were rated on a Likert scale from 1 (strongly disagree) to 7 (strongly agree) (α = 0.85).

4.1.3.8 Explicit Racial Bias

Explicit racial bias was measured using a scale developed by Uhlmann, Brescoll, and Machery (2010). Sample statements include “If your personal safety is at stake, it's sensible to avoid members of ethnic groups known to behave more aggressively” and “Law enforcement officers should act as if members of all racial groups are equally likely to commit crimes.” Items were rated on a Likert scale from 1 (strongly disagree) to 7 (strongly agree) (α = 0.76).

4.1.3.9 Demographics

We collected data on age, gender, race, level of education, employment status, earned income, work sector, country of residency, community (e.g., urban, suburban, and rural), and level of English proficiency.

4.2 Study 1 Results

Table 1 displays the means, standard deviations, and correlations of our study variables. Hypothesis 1 suggested that a strong versus a weak signal fit from AI would increase AI convergence in a high-stakes scenario. Hypothesis 2 suggested that having the option to obtain AI advice would likewise increase AI convergence, given that a human chooses to see the optional AI advice. We tested these hypotheses using mixed-effects logistic regression (the “melogit” command in Stata 16; Statacorp 2019) with random intercepts for each participant. Because the data involve repeated binary decisions nested within participants, multilevel logistic regression was required to address nonindependence and our binary dependent variable (see Guo and Zhao 2000 for a review). We first examined the main effects of our experimental conditions, comparing the AI convergence of participants who had received a strong versus weak signal fit, as well as of those who could or could not choose to solicit optional AI advice. Next, we added a dummy variable capturing whether participants declined to solicit AI advice.
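
As an illustrative formalization, let i index image-pair decisions and j index participants. This notation is ours: it is consistent with the description above but not taken from the authors' Stata code. Model 2 can then be written as a random-intercept logistic regression. Each reported odds ratio is an exponentiated coefficient, and var(participant ID) corresponds to the random-intercept variance $\sigma_u^2$:

$$
\operatorname{logit} \Pr(\text{Convergence}_{ij} = 1) = \beta_0 + \beta_1\,\text{WeakFit}_j + \beta_2\,\text{OptionalAI}_j + \beta_3\,\text{Decline}_{ij} + u_j, \qquad u_j \sim \mathcal{N}(0, \sigma_u^2), \qquad \text{OR}_k = e^{\beta_k}
$$

Model 1 omits the Decline term, and Model 3 adds $\beta_4\,\text{WeakFit}_j \times \text{OptionalAI}_j$. Because the effects are conditional on $u_j$, they describe changes in a given participant's odds rather than population-averaged odds.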

| # | Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AI convergence | 0.83 | 0.38 | 1.00 | | | | | | | | | | |
| 2 | Weak signal fit | 0.50 | 0.50 | −0.03 | 1.00 | | | | | | | | | |
| 3 | Optional AI | 0.52 | 0.50 | −0.06 | 0.05 | 1.00 | | | | | | | | |
| 4 | Decline to solicit | 0.16 | 0.37 | −0.28 | 0.08 | 0.42 | 1.00 | | | | | | | |
| 5 | Female | 0.51 | 0.50 | −0.01 | −0.11 | 0.01 | 0.04 | 1.00 | | | | | | |
| 6 | Black participant | 0.50 | 0.50 | −0.03 | 0.01 | 0.01 | 0.11 | 0.03 | 1.00 | | | | | |
| 7 | AI knowledge | 55.58 | 20.93 | 0.00 | −0.12 | −0.03 | 0.07 | 0.02 | 0.30 | 1.00 | | | | |
| 8 | Trusting disposition | 4.66 | 1.42 | 0.04 | −0.16 | −0.06 | −0.06 | 0.03 | −0.11 | 0.11 | 1.00 | | | |
| 9 | Human–computer trust | 3.13 | 0.83 | 0.06 | −0.05 | 0.02 | 0.05 | −0.07 | 0.10 | 0.38 | 0.18 | 1.00 | | |
| 10 | Explicit racism | 2.70 | 1.22 | −0.01 | −0.02 | −0.10 | −0.03 | 0.00 | −0.03 | 0.11 | −0.12 | 0.20 | 1.00 | |
| 11 | Explicit sexism | 3.33 | 1.26 | −0.02 | −0.05 | −0.12 | −0.01 | −0.02 | −0.07 | 0.15 | 0.09 | 0.26 | 0.34 | 1.00 |

- Note: N Level 2 = 280; N Level 1 = 6720. All correlations above 0.025 in absolute value are significant at p < 0.05.

For signal fit, results show no significant effect of weak versus strong signal fit on the likelihood of AI convergence (Odds ratio = 0.797, p = 0.161). For optional AI advice, results show a significant negative effect on AI convergence when AI advice was optional versus mandatory (Odds ratio = 0.630, p = 0.005). Next, we added a covariate to our model capturing whether participants who had the option to solicit AI advice actually chose not to see it. When accounting for whether a participant actually chose to see optional AI advice, the effect of signal fit remained nonsignificant (Odds ratio = 0.884, p = 0.413). Thus, Hypothesis 1 was not supported, as AI signal fit did not affect AI convergence.

In contrast, when accounting for whether a participant actually chose to see optional AI advice, the effect of having the option to solicit AI advice became positive, increasing the odds of convergence (Odds ratio = 1.508, p = 0.011). Choosing not to see the AI advice, by comparison, had a large negative effect, decreasing the odds of making the same decision as the AI (Odds ratio = 0.126, p < 0.001). Thus, Hypothesis 2 was partially supported, with the caveat that participants' choice to actually solicit AI advice was a critical factor in determining the impact of optional AI. This distinction matters for interpreting Hypothesis 2: once we control for whether participants exercised the choice to see AI advice, the results imply that, ceteris paribus, having the option has a strong, positive impact on taking AI advice, but only when the participant actually sees the AI advice.1
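
Two identities make these odds ratios easier to read:

- An odds ratio can be expressed as a percentage change in the odds, 100 × (OR − 1).
- Odds can be converted to a probability with p = odds/(1 + odds).

The sketch below applies both identities to the Study 1 estimates reported above. In a random-intercept model these are conditional (participant-specific) effects. On our reading of the coding (not a result reported by the authors), the Model 1 intercept odds of 9.99 refer to a participant with a random intercept of zero in the reference cells, that is, strong fit and mandatory AI.

```python
def pct_change_in_odds(odds_ratio: float) -> float:
    """Percentage change in the odds implied by an odds ratio."""
    return 100.0 * (odds_ratio - 1.0)


def odds_to_probability(odds: float) -> float:
    """Convert odds to a probability: p = odds / (1 + odds)."""
    return odds / (1.0 + odds)


# Study 1 odds ratios as reported in the text.
estimates = {
    "optional AI (Model 1)": 0.630,
    "optional AI (Model 2)": 1.508,
    "decline to solicit (Model 2)": 0.126,
}
for name, odds_ratio in estimates.items():
    print(f"{name}: {pct_change_in_odds(odds_ratio):+.1f}% change in odds")

# Model 1 intercept (reported as odds) expressed as a probability.
print(f"baseline probability: {odds_to_probability(9.99):.3f}")
```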

4.2.1 Supplementary Analyses

We also tested the interaction between the two manipulations and found no interaction between signal fit and optional AI advice on AI convergence (signal fit main effect: Odds ratio = 0.832, p = 0.404; optional AI advice main effect: Odds ratio = 1.422, p = 0.114; interaction: Odds ratio = 1.120, p = 0.706). Given that the results of our study were unexpected, we conducted a series of abductive robustness checks and supplementary analyses to better understand the nature of the data and of our experiment. Critically, we measured participants' confidence in each decision, as confidence has been shown to be a key driver in AI-supported decision-making (Chong et al. 2022). Although controlling for confidence was not preregistered, any confidence level below 100% suggests that participants experienced uncertainty, which is essential under signaling theory. Therefore, we controlled for participants' level of confidence and for other variables relevant to our theorizing. In these analyses, we explored whether confidence, explicit racial bias, explicit gender bias, participant gender, participant race, and trust in AI (a component of algorithmic appreciation) altered the effects of our experiment. Adding these controls produced no qualitative difference in our pattern of results; however, participants' racial bias had a negative impact on AI convergence, whereas confidence and trust in AI significantly increased the likelihood that employees took their AI teammate's advice (see Tables 2 and 3).
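
The interaction model includes a product term, so its main-effect odds ratios are conditional on the other dummy being 0. The odds ratio for one manipulation when the other dummy equals 1 is the product of its main-effect odds ratio and the interaction odds ratio. The sketch below applies this identity to the Study 1 interaction estimates reported above. It illustrates the arithmetic only and is not an additional test.

```python
import math


def conditional_odds_ratio(main_or: float, interaction_or: float, moderator: int) -> float:
    """Odds ratio of a dummy predictor at a given level (0 or 1) of a dummy
    moderator in a logit model with a product term:
    exp(b_main + b_interaction * moderator)."""
    return math.exp(math.log(main_or) + math.log(interaction_or) * moderator)


# Study 1 interaction model: optional AI main effect and interaction term.
optional_or, interaction_or = 1.422, 1.120
print(f"optional AI, strong fit: {conditional_odds_ratio(optional_or, interaction_or, 0):.3f}")
print(f"optional AI, weak fit:   {conditional_odds_ratio(optional_or, interaction_or, 1):.3f}")
```

The interaction itself was not significant (p = 0.706), so the gap between these two conditional odds ratios should not be over-interpreted.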

Model 1: main effects only; Model 2: main effects plus solicit decision; Model 3: interaction plus solicit decision.

| Variable | Model 1 odds ratio | Model 1 SE | Model 2 odds ratio | Model 2 SE | Model 3 odds ratio | Model 3 SE |
|---|---|---|---|---|---|---|
| Weak signal fit | 0.80 | 0.13 | 0.88 | 0.13 | 0.83 | 0.18 |
| Optional AI advice | 0.63** | 0.10 | 1.51* | 0.24 | 1.42 | 0.32 |
| Decline to solicit | | | 0.13** | 0.01 | 0.13** | 0.01 |
| Weak signal fit × Optional AI advice | | | | | 1.12 | 0.34 |
| Intercept | 9.99** | 1.49 | 9.01** | 1.24 | 9.28** | 1.48 |
| var (participant ID) | 1.37 | 0.19 | 1.10 | 0.16 | 1.10 | 0.16 |

- Note: N Level 2 (Participants) = 280; N Level 1 (Observations) = 6720.

- ** p < 0.01.

- * p < 0.05.

- † p < 0.1.

Model 1: main effects only; Model 2: main effects plus solicit decision; Model 3: interaction plus solicit decision.

| Variable | Model 1 odds ratio | Model 1 SE | Model 2 odds ratio | Model 2 SE | Model 3 odds ratio | Model 3 SE |
|---|---|---|---|---|---|---|
| Weak signal fit | 0.78 | 0.13 | 0.90 | 0.13 | 0.88 | 0.20 |
| Optional AI advice | 0.55** | 0.09 | 1.48* | 0.24 | 1.46† | 0.34 |
| Decline to solicit | | | 0.09** | 0.01 | 0.09** | 0.01 |
| Weak signal fit × Optional AI advice | | | | | 1.03 | 0.31 |
| Confidence in decision | 1.01** | 0.00 | 1.03** | 0.00 | 1.03** | 0.00 |
| Female participant | 0.89 | 0.16 | 0.96 | 0.15 | 0.96 | 0.14 |
| Black participant | 0.72† | 0.12 | 0.82 | 0.13 | 0.82 | 0.13 |
| Explicit racial bias | 0.94 | 0.07 | 0.94 | 0.06 | 0.94 | 0.06 |
| Explicit gender bias | 0.91 | 0.06 | 0.93 | 0.06 | 0.93 | 0.06 |
| AI knowledge | 0.99 | 0.00 | 1.00 | 0.00 | 0.99 | 0.00 |
| Trust in AI system | 1.40** | 0.15 | 1.35** | 0.14 | 1.33** | 0.14 |
| Intercept | 2.00 | 0.86 | 0.81 | 0.32 | 0.82 | 0.35 |
| var (participant ID) | 1.29 | 0.18 | 1.02 | 0.15 | 1.02 | 0.15 |

- Note: N Level 2 (Participants) = 280; N Level 1 (Observations) = 6720.

- ** p < 0.01.

- * p < 0.05.

- † p < 0.1.

4.3 Study 1 Discussion

The results of Study 1 were surprising. Hypothesis 1 was not supported, suggesting that signal fit did not affect human employees' tendency to converge with AI in their decisions. On its own, signal fit does not appear to play a major role in AI convergence in the workplace, at least not in a dyadic partnership in the facial recognition context. That said, the results partially supported Hypothesis 2, suggesting that an optional AI signal is crucial for effective human–AI teaming in organizational settings. The effect of optional AI became positive when controlling for whether receivers chose to see AI advice. This is an important finding for the design of human–AI teams: it reinforces the “augmentation thesis” while favoring a collaborative model of hybrid decision-making. Ultimately, these data suggest that we may need to reject the oppositional framing of AI versus human decision-making to optimize human–AI teams. Finally, the effect of optional AI was independent of the fit of the AI partner's signal sent to receivers.

These unexpected results made it clear that we needed to replicate our findings, as the data might represent a one-time finding idiosyncratic to this specific data set. Beyond this, we needed to address a potential confound in our experimental design. Namely, in Study 1, we asked participants in the optional AI condition to solicit optional AI advice only if they were not confident in their initial decision (see the Decline to Solicit AI Advice measure). This design is aligned with two stages described by signaling theory: attending to observable signals and interpreting them. It could nevertheless have introduced demand effects, or otherwise altered participants' behavior, by tying confidence directly to the decision to see AI advice in the optional AI conditions.

4.4 Study 2 Method

4.4.1 Participants and Procedure

We followed the same procedures for Study 2 as in Study 1, with two exceptions. First, we restricted participant location to the United States. Second, we removed any language about confidence when asking participants in the optional AI conditions whether they wanted to see optional AI advice. A total of 296 participants followed the link to participate in the study. We removed seven participants who failed to provide consent or failed to recall basic information after the tutorial (process checks). This left 289 individuals who were allowed to proceed to the full experiment and were paid £2.90 in exchange for their time. These participants were on average 44.18 years old (SD = 13.26). Approximately 48.6% of participants identified as female and 51.4% as male. Approximately 49% of participants identified as Black and 51% as White.

4.4.2 Measures

The same measures used in Study 1 were used in Study 2, with one exception: participants in the optional AI conditions were asked whether they wanted to see optional AI advice and could answer YES or NO. The internal consistency of our measures was adequate (α Trust in AI = 0.94; α Explicit Gender Bias = 0.91; α Explicit Racial Bias = 0.78).

4.4.3 Manipulation Checks

As in Study 1, χ² tests revealed that participants accurately recalled whether they had the option to solicit AI advice (χ² = 83.164, p < 0.001) and the signal fit (χ² = 74.176, p < 0.001). Logistic regressions also showed that only the variable capturing each experimental condition was a significant predictor of recalling information about that condition (p < 0.001 in each case). When recalling whether there was optional AI advice, there was no effect of signal fit (p = 0.451) or of the interaction between optional AI advice and signal fit (p = 0.472). The parallel check for the signal fit condition followed the same pattern: there was no effect of the optional AI advice condition (p = 0.279) or of the interaction between optional AI advice and signal fit (p = 0.985).

4.5 Study 2 Results

Table 4 displays the means, standard deviations, and correlations of our Study 2 variables. For the second time, results show no significant effect of signal fit (i.e., of the additional information that weakened the signal) on the likelihood of AI convergence (Odds ratio = 0.827, p = 0.218). Furthermore, results again show a significant negative effect on the likelihood of AI convergence when AI advice was optional versus mandatory (Odds ratio = 0.705, p = 0.024). Results again follow the same pattern as in Study 1 when accounting for whether a participant actually chose to see AI advice. When controlling for this choice, the effect of signal fit remained nonsignificant (Odds ratio = 0.900, p = 0.475), whereas the effect of having the option to see AI advice became positive, increasing the odds of AI convergence (Odds ratio = 1.400, p = 0.034). Again, declining the option for AI advice had a large negative effect, decreasing the odds that participants made the same decision as the AI suggested (Odds ratio = 0.159, p < 0.001). Thus, Hypothesis 1 was not supported, and Hypothesis 2 was supported for a second time.

| # | Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AI convergence | 0.83 | 0.38 | 1.00 | | | | | | | | | | |
| 2 | Weak signal fit | 0.49 | 0.50 | −0.02 | 1.00 | | | | | | | | | |
| 3 | Optional AI | 0.53 | 0.50 | −0.04 | −0.02 | 1.00 | | | | | | | | |
| 4 | Decline to solicit | 0.15 | 0.35 | −0.23 | 0.05 | 0.39 | 1.00 | | | | | | | |
| 5 | Female | 0.49 | 0.50 | 0.05 | 0.02 | 0.01 | −0.01 | 1.00 | | | | | | |
| 6 | Black participant | 0.49 | 0.50 | 0.01 | −0.02 | 0.03 | 0.07 | 0.00 | 1.00 | | | | | |
| 7 | AI knowledge | 58.41 | 18.66 | 0.00 | 0.11 | −0.08 | 0.01 | −0.16 | 0.19 | 1.00 | | | | |
| 8 | Trusting disposition | 4.21 | 1.60 | −0.03 | −0.03 | −0.08 | −0.09 | −0.12 | −0.18 | 0.16 | 1.00 | | | |
| 9 | Human–computer trust | 3.09 | 0.87 | 0.08 | 0.09 | −0.04 | 0.02 | −0.13 | 0.13 | 0.32 | 0.21 | 1.00 | | |
| 10 | Explicit racism | 2.81 | 1.29 | −0.01 | 0.12 | −0.10 | 0.05 | −0.16 | −0.01 | 0.03 | −0.07 | 0.21 | 1.00 | |
| 11 | Explicit sexism | 2.99 | 1.43 | 0.02 | 0.05 | 0.05 | 0.07 | −0.26 | −0.05 | 0.02 | 0.03 | 0.30 | 0.50 | 1.00 |

- Note: N Level 2 = 289; N Level 1 = 6936. All correlations above 0.025 in absolute value are significant at p < 0.05.

4.5.1 Study 2 Supplementary Analysis

As in Study 1, we controlled for participants' level of confidence and other variables relevant to our theorizing. Adding the controls of confidence, trust in AI, racial bias, and gender bias again produced no qualitative difference in our tests of Hypotheses 1 and 2 (see Tables 5 and 6). As in Study 1, participants' confidence and their trust in their AI teammate increased the likelihood of AI convergence. Additionally, women participants were more likely than men to converge with AI advice. Finally, as in Study 1, the interaction between signal fit and optional AI advice was not significant (signal fit main effect: Odds ratio = 0.884, p = 0.568; optional AI advice main effect: Odds ratio = 1.372, p = 0.142; interaction: Odds ratio = 1.034, p = 0.909).

Model 1: main effects only; Model 2: main effects plus solicit decision; Model 3: interaction plus solicit decision.

| Variable | Model 1 odds ratio | Model 1 SE | Model 2 odds ratio | Model 2 SE | Model 3 odds ratio | Model 3 SE |
|---|---|---|---|---|---|---|
| Weak signal fit | 0.83 | 0.13 | 0.90 | 0.13 | 0.88 | 0.19 |
| Optional AI advice | 0.70* | 0.11 | 1.40* | 0.22 | 1.37 | 0.30 |
| Decline to solicit | | | 0.16** | 0.02 | 0.16** | 0.02 |
| Weak signal fit × Optional AI advice | | | | | 1.03 | 0.31 |
| Intercept | 9.09** | 1.30 | 8.45** | 1.15 | 8.52** | 1.35 |
| var (participant ID) | 1.27 | 0.17 | 1.11 | 0.15 | 1.11 | 0.15 |

- Note: N Level 2 (Participants) = 289; N Level 1 (Observations) = 6936.

- ** p < 0.01.

- * p < 0.05.

- † p < 0.1.

Model 1: main effects only; Model 2: main effects plus solicit decision; Model 3: interaction plus solicit decision.

| Variable | Model 1 odds ratio | Model 1 SE | Model 2 odds ratio | Model 2 SE | Model 3 odds ratio | Model 3 SE |
|---|---|---|---|---|---|---|
| Weak signal fit | 0.78 | 0.12 | 0.83 | 0.12 | 0.78 | 0.17 |
| Optional AI advice | 0.62** | 0.10 | 1.36* | 0.21 | 1.27 | 0.28 |
| Decline to solicit | | | 0.11** | 0.01 | 0.11** | 0.01 |
| Weak signal fit × Optional AI advice | | | | | 1.13 | 0.33 |
| Confidence in decision | 1.02** | 0.00 | 1.03** | 0.00 | 1.02** | 0.00 |
| Female participant | 1.44* | 0.23 | 1.46* | 0.22 | 1.46** | 0.22 |
| Black participant | 0.96 | 0.15 | 1.06 | 0.16 | 1.06 | 0.16 |
| Explicit racial bias | 0.97 | 0.07 | 1.03 | 0.07 | 1.03 | 0.07 |
| Explicit gender bias | 1.01 | 0.06 | 1.02 | 0.06 | 1.02 | 0.06 |
| AI knowledge | 0.99† | 0.00 | 0.99† | 0.00 | 0.99† | 0.00 |
| Trust in AI | 1.41** | 0.12 | 1.41** | 0.13 | 1.41** | 0.13 |
| Intercept | 0.96 | 0.37 | 0.44* | 0.17 | 0.45* | 0.18 |
| var (participant ID) | 1.16 | 0.16 | 1.01 | 0.14 | 1.01 | 0.14 |

- Note: N Level 2 (Participants) = 289; N Level 1 (Observations) = 6936.

- ** p < 0.01.

- * p < 0.05.

- † p < 0.1.

4.6 Study 2 Discussion

The results of Study 2 replicated the support for Hypothesis 2 while removing potential demand effects inherent in the Study 1 design. Optional AI advice appeared to improve the likelihood of employees' convergence with AI, but only when participants chose to see the optional AI signal. As in Study 1, this effect appeared to be independent of signal fit. Thus far, our studies have tested our first two hypotheses in a dyadic human–AI partnership. Given this design, we cannot speak to human–AI teams in which more than one human employee works alongside AI. Therefore, in Study 3, we extended our study design to include an additional signal sent from a human teammate.

4.7 Study 3 Method

4.7.1 Participants and Procedure

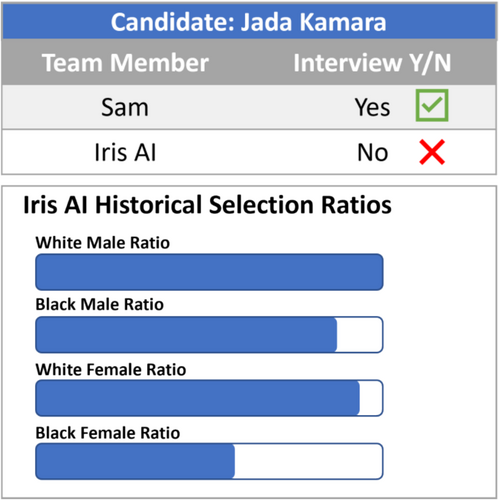

We followed the same procedures for Study 3 as in Study 2, with three exceptions. First, we reduced the number of image pairs to 16 to reduce participant burden. We retained the pairs that had been most difficult in our previous two studies, so that uncertainty would be highest, driving signal salience and interpretation (Drover, Wood, and Corbett 2018). Second, we included an additional signal sent by a human colleague, Sam Smith, for every image pair. With this addition, we were able to manipulate the congruence of the signal set composed of human and AI team member signals. The study was balanced so that half of the signal sets were congruent, with the human and the AI signaling the same decision; in the other half, the signal from the AI was the opposite of the signal from Sam Smith. Finally, we named the facial recognition tool Iris AI to parallel the presentation of the colleague Sam Smith in our decision-making task. A total of 319 participants followed the link to participate in the study. We removed 20 participants who failed to provide consent or failed to recall basic information after the tutorial (process checks). This left 299 individuals who were allowed to proceed to the full experiment and were paid £2.90 in exchange for their time. These participants were on average 43.26 years old (SD = 12.82). Approximately 47.8% of participants identified as female, 50.2% as male, 1.7% as transgender, and 0.3% as nonconforming. Approximately 49.2% of participants identified as Black/Caribbean/African/Afro Latin and 49.8% as White/Caucasian/European.

4.7.1.1 Signal Set Congruence

In the congruent signal set condition (coded as 1), participants were presented with a pair of images next to an employee badge, together with the decisions made by Iris AI and Sam Smith, and both decisions were the same (see Figure 3). In the incongruent signal set condition (coded as 0), participants were likewise shown Iris AI's decision (match or no match) and Sam Smith's decision (match or no match), but the two decisions were opposite (see Figure 4).
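The balanced congruence manipulation and its 1/0 coding can be sketched as follows. This is a minimal illustration, not the survey software used in the study: it assumes the signals are encoded as the strings "match" and "no match", and `Trial` and `build_trials` are hypothetical names. Each image pair keeps its AI signal, and the human signal is set equal to or opposite from it so that exactly half of the signal sets are congruent.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class Trial:
    image_pair: int
    ai_signal: str      # hypothetical encoding: "match" or "no match"
    human_signal: str   # Sam Smith's signal, same encoding

    @property
    def congruent(self) -> int:
        # Coded 1 when Iris AI and Sam Smith signal the same decision, else 0
        return int(self.ai_signal == self.human_signal)


def build_trials(ai_signals: list[str], seed: int | None = None) -> list[Trial]:
    """Pair each AI signal with a human signal so that exactly half of the
    signal sets are congruent, with congruent trials in random positions."""
    n = len(ai_signals)
    if n % 2:
        raise ValueError("a balanced design needs an even number of trials")
    rng = random.Random(seed)
    same_flags = [True] * (n // 2) + [False] * (n // 2)
    rng.shuffle(same_flags)
    flip = {"match": "no match", "no match": "match"}
    return [
        Trial(i, ai, ai if same else flip[ai])
        for i, (ai, same) in enumerate(zip(ai_signals, same_flags))
    ]
```

With 16 image pairs, `build_trials(["match", "no match"] * 8, seed=1)` yields eight congruent and eight incongruent signal sets; across 299 participants this gives 299 × 16 = 4784 trial-level observations, the Level 1 N reported for Table 8.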

4.7.2 Measures

The same focal measures that were used in Study 2 were used in Study 3 except where detailed below. The internal consistencies of our measures were adequate (α Trust in AI = 0.94; α Explicit Gender Bias = 0.91; α Explicit Racial Bias = 0.78).
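The internal consistencies reported here are Cronbach's α values. The standard formula, α = k/(k − 1) · (1 − Σ item variances / variance of total scores), can be sketched as below; the function name and input layout (one row of item scores per participant) are assumptions for illustration, and the statistical software the authors used is not specified in the text.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of participant rows, each holding k item scores.

    Population variances are used throughout; because the same n/(n - 1)
    factor would scale both numerator and denominator, the ratio is unchanged.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    items = list(zip(*responses))  # transpose rows into per-item columns
    item_var = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_var / total_var)
```

For three perfectly parallel items, for example `[[1, 1, 1], [2, 2, 2], [3, 3, 3]]`, the function returns 1.0.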

4.7.2.1 Algorithm Aversion

In our review of the literature, it became clear that research on algorithm aversion relies heavily on experimental results (Jussupow, Benbasat, and Heinzl 2024; Mahmud et al. 2022). Although our previous studies captured participants' trust in AI systems, to our knowledge, no comprehensive measure of the underlying dimensions of algorithm aversion exists. To operationalize this construct, we developed a new scale and assessed its construct validity and psychometric soundness. After reviewing the literature, the authors first generated a list of 60 content-valid items capturing four dimensions of algorithm aversion through an iterative prompt-engineering process using ChatGPT (Bail 2024; Götz et al. 2023). These dimensions were Fear of Inaccuracy, Lack of Trust, Fear of Loss of Control, and Resistance to Change (15 items for each dimension). Participants completed all 60 items at the end of the survey. In an exploratory factor analysis of these data, five factors had eigenvalues greater than 1 and were retained for rotation. Factors were rotated using an oblique rotation, which allows factors to correlate. Applying a cutoff of 0.4 for item loadings after rotation, the fifth factor consisted mostly of cross-loaded items, which were ultimately dropped from the scale, resulting in a final scale of 45 items across four factors. Table 7 shows the items and factor loadings for our new measure. The internal consistency of this measure and its subdimensions was adequate (α Algorithmic Aversion = 0.98; α Fear of Inaccuracy = 0.96; α Lack of Trust = 0.92; α Fear of Loss of Control = 0.89; α Resistance to Change = 0.96).

| # | Item | Factor loading(s) |
|---|---|---|
| 1 | I am concerned about the reliability of algorithms. | 0.648 |
| 2 | Algorithms could make errors that would negatively impact my life. | 0.7582 |
| 3 | I feel uncertain about the dependability of algorithm-based decisions. | 0.6559 |
| 4 | The potential for inaccuracies in algorithmic results worries me. | 0.7461 |
| 5 | I question the correctness of outcomes produced by algorithms. | 0.7262 |
| 6 | Algorithms might not handle unexpected situations well. | 0.7465 |
| 7 | I believe that algorithms can easily misinterpret complex data. | 0.737 |
| 8 | The risk of error in algorithmic decisions is too high for comfort. | 0.6523 |
| 9 | Algorithms may not always provide consistent results. | 0.6921 |
| 10 | I am skeptical about the accuracy of algorithms when they process large amounts of data. | 0.6862 |
| 11 | The thought of relying solely on algorithmic accuracy makes me uneasy. | 0.6815 |
| 12 | Algorithms are not infallible and this can lead to serious mistakes. | 0.6819 |
| 13 | I distrust algorithms when the stakes of the decision are high. | 0.6219 |
| 14 | Algorithms could generate incorrect results without any warning signs. | 0.7461 |
| 15 | I am wary of using algorithms for decisions that have long-term consequences. | 0.6519 |
| 16 | I do not trust algorithms to understand my individual needs. | 0.4031 |
| 17 | Algorithms lack the human insight necessary for many decisions. | 0.4067 |
| 18 | I find it hard to trust a process I cannot easily understand or see. | 0.6 |
| 19 | I am not confident in algorithms to handle tasks that require emotional sensitivity. | 0.4881 |
| 20 | The impersonal nature of algorithms makes them hard to trust. | 0.6629 |
| 21 | I feel that algorithms do not have the capability to adapt to new or evolving situations. | 0.5061 |
| 22 | Algorithms are not transparent enough for me to trust their decisions. | 0.5947 |
| 23 | I worry about biases embedded in algorithms affecting their judgments. | 0.4047; 0.4473 |
| 24 | Trusting algorithms with personal information makes me uncomfortable. | 0.4584 |
| 25 | I am skeptical about the fairness of algorithmic decisions. | 0.5247 |
| 26 | Algorithms do not provide the rationale for their decisions, which diminishes my trust. | 0.4482 |
| 27 | I am concerned that algorithms prioritize efficiency over ethical considerations. | |
| 28 | Algorithms cannot be held accountable in the same way humans can. | |
| 29 | I distrust algorithms because I cannot negotiate or reason with them. | |
| 30 | The idea of algorithms making decisions without human oversight is troubling to me. | |
| 31 | Relying on algorithms makes me feel like I am losing control over decisions. | 0.5915 |
| 32 | I prefer to keep important decision-making processes directly under human control. | |
| 33 | Algorithms taking over tasks leave me feeling helpless. | 0.6165 |
| 34 | I am uneasy about delegating critical decisions to algorithms. | |
| 35 | I believe that excessive use of algorithms can erode personal autonomy. | |
| 36 | Algorithms decide too much, too quickly, reducing my control. | 0.6547 |
| 37 | I am concerned about becoming overly dependent on algorithms. | 0.6418 |
| 38 | Using algorithms feels like putting my fate in the hands of a machine. | 0.5138 |
| 39 | I prefer decisions made by people because it keeps control within human hands. | 0.438 |
| 40 | The use of algorithms in decision-making processes makes me feel disconnected. | 0.6046 |
| 41 | I think that algorithms can make choices that aren't in my best interest. | |
| 42 | Algorithms reduce the human touch in decisions that affect me. | |
| 43 | I worry about the consequences of errors when control is ceded to algorithms. | 0.4743 |
| 44 | I am reluctant to accept decisions made without human involvement. | |
| 45 | The shift toward algorithmic decision-making diminishes individual influence. | 0.4004 |
| 46 | I am hesitant to adopt new technologies that rely heavily on algorithms. | 0.6497 |
| 47 | I prefer traditional methods over algorithmic solutions. | 0.8141 |
| 48 | The pace of change toward more algorithm use is concerning. | 0.622 |
| 49 | I am skeptical about replacing human roles with algorithms. | 0.5336 |
| 50 | I prefer the way things were done before algorithms were involved. | 0.8686 |
| 51 | Rapid technological changes involving algorithms are unsettling. | 0.6641 |
| 52 | I resist changes that involve using algorithms in my daily activities. | 0.7925 |
| 53 | I believe that some things are better left unchanged, especially regarding algorithm use. | 0.6663 |
| 54 | The movement toward algorithm-driven processes is too fast for my comfort. | 0.7089 |
| 55 | I am cautious about the increasing reliance on algorithms in professional settings. | 0.6269 |
| 56 | I prefer to stick to methods that have proven successful over time rather than adopting new algorithmic techniques. | 0.8912 |
| 57 | I am wary of changes that could make algorithms central to my life or work. | 0.7064 |
| 58 | I am not ready to embrace the widespread use of algorithms. | 0.8122 |
| 59 | I find it difficult to accept the shift from human expertise to algorithmic control. | 0.8074 |
| 60 | The trend toward automating more with algorithms does not sit well with me. | 0.7914 |

- Note: Items that cross-loaded on a fifth factor were not retained in the final version of the scale. Items with bold factor loadings were retained in the final measure.
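The two retention rules described for the exploratory factor analysis, keeping factors with eigenvalues greater than 1 and screening items against a 0.4 loading cutoff, can be sketched as below. This is a simplified illustration under stated assumptions: the eigenvalues and the rotated pattern matrix are taken as inputs (the oblique rotation itself would come from a dedicated factor-analysis package and is not reimplemented here), and the function names and example values are hypothetical. The screen shown is a common simple-structure rule and may differ in detail from the authors' item-by-item decisions.

```python
def kaiser_count(eigenvalues):
    """Number of factors retained under the eigenvalue-greater-than-1 rule."""
    return sum(1 for ev in eigenvalues if ev > 1.0)


def screen_items(loadings, cutoff=0.4):
    """Classify items from a rotated pattern matrix.

    loadings: dict mapping an item id to its list of loadings (one per factor).
    Returns item id -> ("keep", factor index), ("cross", [factor indices])
    when two or more loadings reach the cutoff, or ("drop", None) when none do.
    """
    result = {}
    for item, row in loadings.items():
        salient = [j for j, lam in enumerate(row) if abs(lam) >= cutoff]
        if len(salient) == 1:
            result[item] = ("keep", salient[0])
        elif salient:
            result[item] = ("cross", salient)
        else:
            result[item] = ("drop", None)
    return result
```

For instance, hypothetical eigenvalues `[3.1, 2.0, 1.4, 1.1, 1.02, 0.9]` retain five factors, and an item loading 0.41 and 0.45 on two factors is flagged as cross-loading.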

4.7.3 Manipulation Checks

As in Studies 1 and 2, χ2 tests revealed that participants accurately recalled whether they had the option to solicit advice (χ2 = 72.638, p < 0.001) and the signal fit (χ2 = 117.068, p < 0.001). Regressions also showed that only the variable capturing each experimental condition significantly predicted recall of information about that condition (p < 0.001 in each case). To check whether our signal set congruence manipulation affected participants, we regressed participants' confidence in their final decision on signal set congruence. A mixed effects regression showed that the only manipulated variable predicting final decision confidence was signal set congruence (γ = 8.449, p < 0.001). The main effects of signal fit (γ = −0.734, p = 0.650) and optional advice (γ = 1.309, p = 0.419) were not significant, nor was the three-way interaction term between the experimental manipulations (γ = 0.718, p = 0.682).
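The χ2 manipulation checks compare the condition participants were assigned to with the condition they recalled. A minimal sketch of a Pearson χ2 test of independence on a 2 × 2 table is shown below, using only the standard library; the table layout and the function name are assumptions, and the counts in the example are hypothetical rather than taken from the study. For one degree of freedom, the upper-tail probability equals erfc(√(χ2/2)), which avoids a dependency on a statistics package.

```python
import math


def chi2_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts
    (rows: assigned condition, columns: recalled condition).
    No continuity correction is applied. Returns (statistic, p value)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    if 0 in rows or 0 in cols:
        raise ValueError("every row and column total must be positive")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = rows[i] * cols[j] / n
            stat += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, P(chi2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p
```

A perfectly accurate recall table such as `[[20, 0], [0, 20]]` gives χ2 = 40 with a p value far below 0.001, while a table with no association, `[[10, 10], [10, 10]]`, gives χ2 = 0 and p = 1.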

4.8 Study 3 Results

Table 8 displays the means, standard deviations, and correlations of our Study 3 variables. For the third time, signal fit had no significant effect on the likelihood of AI convergence (Odds ratio = 0.875, p = 0.267). Unlike in Studies 1 and 2, the model including only main effects (Model 1) did not show a significant negative effect of optional AI advice on the likelihood of convergence (Odds ratio = 1.112, p = 0.378). Instead, the key driver of AI convergence was the congruence of the signal set within the team (Hypothesis 3). When the signals sent by Iris AI and Sam Smith were congruent, participants were much more likely to take the AI advice, and this effect was large (Odds ratio = 9.098, p < 0.001).
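To make an odds ratio such as 9.098 more concrete, it helps to translate it into a probability for a given baseline. The sketch below does this arithmetic; the baseline probability is a hypothetical input chosen for illustration, not an estimate from the study, and in a mixed effects logit the reported odds ratios are conditional on the random effects, so the translation is only approximate at the population level.

```python
def prob_from_odds_ratio(p0, odds_ratio):
    """Probability implied by multiplying the baseline odds p0 / (1 - p0)
    by an odds ratio and converting the result back to a probability."""
    if not 0 < p0 < 1:
        raise ValueError("baseline probability must be strictly between 0 and 1")
    odds = odds_ratio * p0 / (1 - p0)
    return odds / (1 + odds)
```

Under a hypothetical baseline of 0.5 for incongruent signal sets, `prob_from_odds_ratio(0.5, 9.098)` is about 0.90, which illustrates why the congruence effect is described as large.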

| # | Variable | M | SD | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | AI convergence | 0.680 | 0.460 | 1.000 | | | | | | | | | | | |
| 2 | Weak signal fit | 0.480 | 0.500 | −0.021 | 1.000 | | | | | | | | | | |
| 3 | Optional AI advice | 0.530 | 0.490 | 0.019 | 0.032 | 1.000 | | | | | | | | | |
| 4 | Congruent signal set | 0.500 | 0.500 | 0.412 | 0.000 | 0.000 | 1.000 | | | | | | | | |
| 5 | Decline to solicit | 0.980 | 0.290 | −0.153 | 0.001 | 0.307 | −0.009 | 1.000 | | | | | | | |
| 6 | Initial decision confidence | 72.230 | 20.410 | −0.126 | 0.004 | −0.007 | −0.017 | 0.243 | 1.000 | | | | | | |
| 7 | Female | 0.478 | 0.500 | 0.003 | 0.022 | −0.141 | 0.000 | −0.017 | 0.025 | 1.000 | | | | | |
| 8 | Black participant | 0.490 | 0.500 | 0.008 | 0.023 | −0.116 | 0.000 | 0.009 | 0.139 | 0.009 | 1.000 | | | | |
| 9 | Explicit racism | 2.650 | 1.320 | −0.030 | 0.033 | 0.004 | 0.000 | 0.052 | 0.049 | −0.302 | 0.085 | 1.000 | | | |
| 10 | Explicit sexism | 3.200 | 1.450 | 0.010 | −0.024 | −0.096 | 0.000 | −0.005 | 0.055 | −0.284 | 0.010 | 0.550 | 1.000 | | |
| 11 | Trust in AI system | 3.650 | 0.790 | 0.084 | 0.030 | 0.061 | 0.000 | 0.062 | 0.155 | −0.058 | 0.150 | 0.158 | 0.124 | 1.000 | |
| 12 | Algorithmic aversion | 4.600 | 1.170 | −0.064 | −0.005 | 0.046 | 0.000 | −0.034 | −0.035 | 0.074 | −0.112 | 0.010 | −0.085 | −0.407 | 1.000 |

- Note: N Level 2 = 299; N Level 1 = 4784. All correlations above 0.025 in absolute value are significant at p < 0.05.

When we account for participants' choice to see the advice of both team members, Sam Smith and Iris AI, however, the results again show the same pattern as in the previous two studies. Choosing not to see team members' advice decreased the likelihood that participants made the same decision as Iris AI (Odds ratio = 0.212, p < 0.001), while being in the optional AI advice condition had a positive effect after accounting for this choice (Odds ratio = 1.519, p < 0.001). Thus, Hypothesis 1 was not supported, Hypothesis 2 was supported for the third time, and Hypothesis 3 was supported.
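Because a logistic model is additive on the log-odds scale, odds ratios for terms that enter the model without an interaction combine by multiplication. The sketch below shows this arithmetic for the two reported odds ratios; it assumes a specification in which the optional-advice term and the decline-to-solicit term enter additively, which may not match every model the authors estimated, and the function name is hypothetical.

```python
import math


def combined_odds_ratio(*odds_ratios):
    """Odds multiplier for several additive terms in a logit model:
    log-odds add, so the odds ratios multiply."""
    return math.exp(sum(math.log(r) for r in odds_ratios))
```

Under that assumption, `combined_odds_ratio(1.519, 0.212)` is about 0.32: a participant in the optional condition who declines to see the advice would have roughly one third the odds of converging with Iris AI relative to a participant in the mandatory condition, whereas a participant in the optional condition who chooses to see the advice would have about 1.5 times those odds.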

4.8.1 Study 3 Supplementary Analysis

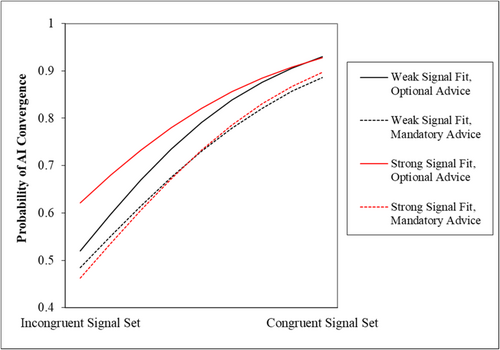

Although not hypothesized, to fully probe our experimental design, we tested the three-way interaction between signal fit, optional advice, and signal set congruence on the likelihood of AI convergence. This interaction term was significant (Odds ratio = 1.911, p = 0.043). As shown in Figure 5, signal set congruence has the largest impact of our manipulated variables. When the signals from Sam Smith and Iris AI are the same, participants are much more likely to take AI advice, and visual inspection suggests that this is slightly more likely when seeing the advice is optional than when it is mandatory. When the signals do not align, however, there are greater differences across the optional advice and signal fit conditions. Specifically, when signal sets are incongruent, AI convergence is more likely when the signal fit is strong (when AI accuracy is not presented) and when seeing team members' advice is optional. Combined, these results provide further support for Hypotheses 2 and 3 and, for the first time, suggest that signal fit may have a nuanced effect that is conditional on the presence of a human team member.
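A three-way interaction term is only interpretable alongside all of its lower-order terms. The sketch below expands the three binary manipulations into the seven predictors of a full factorial specification (three main effects, three two-way products, and the three-way product); the dummy names `weak_fit`, `optional`, and `congruent` are hypothetical labels following the coding in Table 8, and the sketch builds design columns only rather than fitting the authors' mixed effects model.

```python
from itertools import combinations


def interaction_terms(**dummies):
    """Expand 0/1 condition dummies into main effects plus every two-way
    and higher-order product, keyed by the joined dummy names."""
    names = sorted(dummies)
    row = {}
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            value = 1
            for name in combo:
                value *= dummies[name]
            row[":".join(combo)] = value
    return row
```

For a trial with weak signal fit, optional advice, and an incongruent signal set, `interaction_terms(weak_fit=1, optional=1, congruent=0)` sets `optional:weak_fit` to 1 and every term involving `congruent`, including the three-way product, to 0.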

As in Studies 1 and 2, we controlled for participants' initial decision confidence and other variables relevant to our theorizing. The results of this analysis again show no qualitative difference in our tests of Hypotheses 1–3 when controlling for initial decision confidence, trust in AI, racial bias, and gender bias (see Tables 9 and 10). Furthermore, as in Studies 1 and 2, participants' trust in AI significantly increased their likelihood of taking AI advice in the team. Finally, unlike in Studies 1 and 2, explicit racism significantly decreased the likelihood that participants would take AI advice.