Psychometric Properties of MyCog 2.0: A Human-Centered Cognitive Screening Tool for Older Adults

Funding: This work was supported by National Institutes of Health (1R01AG074245-01).

ABSTRACT

Objectives

Self-administered, user-friendly apps that can detect initial symptoms of cognitive impairment have enormous potential to improve early detection of cognitive decline. We examine the psychometric properties of the redesigned version of MyCog, MyCog 2.0, an app-based tool for older adults that assesses executive function and episodic memory. MyCog 2.0 aims to improve usability while maintaining the psychometric validity demonstrated in the original version.

Methods

Feedback from clinicians and patients on MyCog was gathered to inform the human-centered design improvements of MyCog 2.0. To assess the psychometric properties of the improved tool, data from a community sample (n = 200; mean age = 73 years) who had completed MyCog 2.0 were compared to an age-matched sample who had completed the original MyCog. Internal consistency was evaluated with split-half reliability and McDonald's ωt, and construct validity was evaluated via confirmatory factor analysis. Bayesian differential item functioning analysis was used to evaluate the evidence for equivalence of MyCog and MyCog 2.0.

Results

Internal consistency was high for both the executive function (split-half reliability = 0.92) and episodic memory (ωt = 0.84) tests. A two-factor model showed excellent fit, indicating that the tests measured two related yet distinct constructs, episodic memory and executive functioning, as expected. No differential item functioning between the two test versions was observed for episodic memory performance or executive functioning accuracy; however, response times on five executive function items differed across versions.

Conclusions

Findings support the internal consistency and construct validity of MyCog 2.0, the first reliable self-administered cognitive screener designed specifically for ease of use among older adults, and provide a foundation for forthcoming clinical validation studies.

1 Introduction

Self-administered, app-based screeners that can detect initial symptoms of cognitive impairment in older adults have enormous potential to increase screening rates and improve early detection of pathological cognitive decline (Brayne et al. 2007). To this end, the NIH funded the development of MyCog (L. Curtis et al. 2020), a tablet app that older adults can self-administer during the rooming process of a primary care visit. In preliminary studies, the original version of MyCog demonstrated 79% sensitivity and 82% specificity for detecting impairment in adults over age 65 (L. M. Curtis et al. 2023). MyCog has been incorporated into three large-scale NIH-funded grants to date (5UH3NS105562, U01NS105562, and R01AG069762) and is currently used by several health systems across the country, including Oak Street Health, Access Community Health, and Northwestern Medicine.

Broad adoption of MyCog in clinical settings has yielded substantial amounts of constructive feedback on the usability of the app for patients, clinicians, and clinic staff. Based on this feedback, we made several design changes to the MyCog user experience, resulting in an improved version of the app, MyCog 2.0. The goal of these updates was to improve usability (the ease with which users can interact with an app to achieve a goal) without impacting test validity. That is, we aimed to make the app as easy to use as possible, without changing properties of the test that could interfere with its ability to detect cognitive impairment. As such, the present study aimed to answer the following research questions: (1) What is the evidence of internal consistency of MyCog 2.0? (2) What is the evidence of construct validity of MyCog 2.0 when compared to gold standard neuropsychological tests of the same target constructs? (3) What is the evidence of psychometric equivalence between the original MyCog and MyCog 2.0?

2 Method

2.1 Sample

Data were drawn from two studies, one for each version of the MyCog app, both conducted by the same market research agency. All participants were recruited from the general population; inclusion criteria required that participants were over the age of 65, spoke English, and provided informed consent (Table 1). The original MyCog study was conducted in parallel with a large-scale norming study of the NIH Toolbox Cognitive Battery (NIHTB-CB). The MyCog 2.0 data were collected in parallel with the validation of its smartphone counterpart, MyCog Mobile (Young, Dworak, et al. 2024; Young, McManus Dworak, et al. 2024). Participants were not screened for impairment prior to participation in either study.

| | Original MyCog | MyCog 2.0 |
|---|---|---|
| | Validation study | Validation study |
| Total sample N | 99 | 200 |
| Mean age (SD) [min, max] | 74.39 (6.81) [62, 89] | 72.56 (5.11) [65, 87] |
| n (%) | | |
| Gender | | |
| Female | 63 (63.6) | 109 (45.5) |
| Male | 36 (36.4) | 91 (54.5) |
| Racial identity | | |
| Asian | 0 (0) | 1 (0.5) |
| Black or African American | 6 (6.1) | 35 (17.5) |
| Middle Eastern or North African | 0 (0) | 1 (0.5) |
| Native Hawaiian or Pacific Islander | 0 (0) | 1 (0.5) |
| White | 93 (93.9) | 157 (78.5) |
| Other identity | 0 (0) | 8 (4) |
| Prefer not to answer | 0 (0) | 1 (0.5) |
| Ethnic identity | | |
| Hispanic | 2 (2.0) | 40 (20) |
| Not Hispanic | 97 (97.9) | 160 (80) |
| Education | | |
| Less than high school | 1 (1.0) | 1 (0.5) |
| High school diploma or GED | 43 (43.4) | 38 (19) |
| Some college or 2-year degree | 22 (22.2) | 65 (32.5) |
| 4-year college degree | 17 (17.2) | 61 (30.5) |
| Graduate degree | 16 (16.2) | 35 (17.5) |

2.2 Procedures

For both studies, participants were invited to the lab and asked to complete the self-administered MyCog as part of a larger neuropsychological battery. Data for the original MyCog were collected from June through September 2021, and data for MyCog 2.0 in May 2024.

2.3 Measures

2.3.1 MyCog

The original MyCog includes two performance-based tests that were adapted from the NIH Toolbox for Assessment of Neurological and Behavioral Function (NIHTB; L. Curtis et al. 2020): Dimensional Change Card Sorting (DCCS; Zelazo 2006) and Picture Sequence Memory (PSM; Dikmen et al. 2014). In the original version, participants are shown an animated demonstration video before each task that depicts a hand selecting the correct responses. The demonstration video takes approximately 15 s to view. Participants then complete 10 (5 color, 5 shape) practice trials with feedback.

DCCS (Dimensional Change Card Sorting) measures cognitive flexibility by asking participants to sort images by color and shape as quickly as possible. Each trial presents two reference images at the bottom of the screen, differing in both color and shape, while a target image appears in the center. Participants must match the target to one reference image based on either color or shape. The task consists of five blocks: (1) two demonstration trials matching by shape, (2) five practice trials matching by shape, (3) two demonstration trials matching by color, (4) five practice trials matching by color, and (5) 30 mixed trials requiring matching by either dimension in a pseudo-random order. Response times (RTs) are defined as the number of seconds between image presentation and response; outliers are defined as RTs < 100 ms or > 9 s, or RTs more than three times the median absolute deviation (MAD) from each participant's median RT (Leys et al. 2013). Total testing time is approximately 3 min. The primary score is a combined speed-accuracy score, the rate-correct score (RCS; Woltz and Was 2006), defined as the number of correct trials divided by the total response time.
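The RT trimming and RCS computation described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the MyCog scoring code: the helper names `trim_rts` and `rate_correct_score` are hypothetical, the absolute cutoffs are applied before the MAD rule, the MAD is scaled by the conventional normal-consistency constant 1.4826 discussed by Leys et al. (2013), and excluded trials are assumed to drop out of both the numerator and the denominator of the RCS.

```python
import numpy as np


def trim_rts(rts, min_rt=0.1, max_rt=9.0, mad_k=3.0):
    """Boolean mask of trials kept after outlier exclusion (hypothetical helper).

    Drops RTs < 100 ms or > 9 s, then drops RTs more than mad_k scaled MADs
    from the participant's median RT (computed on the remaining trials).
    """
    rts = np.asarray(rts, dtype=float)
    keep = (rts >= min_rt) & (rts <= max_rt)
    if keep.any():
        med = np.median(rts[keep])
        # Assumption: MAD scaled by 1.4826 for consistency with the normal SD.
        mad = 1.4826 * np.median(np.abs(rts[keep] - med))
        if mad > 0:
            keep &= np.abs(rts - med) <= mad_k * mad
    return keep


def rate_correct_score(rts, correct, **trim_kwargs):
    """RCS = number of correct retained trials / total RT of retained trials."""
    rts = np.asarray(rts, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    keep = trim_rts(rts, **trim_kwargs)
    return correct[keep].sum() / rts[keep].sum()


# Hypothetical participant: two trials fall outside the absolute cutoffs.
rts = [1.0, 1.0, 1.0, 1.0, 0.05, 12.0]
correct = [1, 1, 0, 1, 1, 1]
print(rate_correct_score(rts, correct))  # 0.75
```

With this convention the RCS is expressed in correct responses per second, so higher values indicate faster and more accurate performance.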

The PSM (Picture Sequence Memory) task measures episodic memory by requiring respondents to memorize a sequence of images. It begins with a 10-s animated video demonstrating how to drag pictures across the screen to arrange them, after which participants can practice dragging pictures before beginning the practice trial. In the practice trial, four images related to “camping” (each with a brief audio description) are presented in a particular sequence. The sequence is then scrambled, and participants are asked to reorder the images to match the original sequence. The live trial is a 12-image sequence with no inherent order that depicts identifiable activities related to “a day with a friend.” The images are presented in sequence and then scrambled, and participants are asked to rearrange them in the original order. The primary score is the cumulative number of correct placements.
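To make the link between the primary PSM score and the 12 dichotomous tile scores analyzed in Section 2.4 concrete, the following sketch assumes that a tile counts as correctly placed when it occupies the same position it held in the original sequence. The function `score_psm` and the image labels are hypothetical, and the operational MyCog scoring rule may differ.

```python
from typing import List, Sequence, Tuple


def score_psm(original: Sequence[str], response: Sequence[str]) -> Tuple[List[int], int]:
    """Score each tile 1 if it is in its original position, else 0 (assumed rule).

    Returns the per-tile dichotomous scores and their sum, i.e. the
    cumulative number of correct placements.
    """
    if len(original) != len(response):
        raise ValueError("original and response must have the same length")
    items = [int(o == r) for o, r in zip(original, response)]
    return items, sum(items)


# Hypothetical 12-image sequence (labels A-L) with the last two tiles swapped.
original = list("ABCDEFGHIJKL")
response = list("ABCDEFGHIJLK")
items, total = score_psm(original, response)
print(total)  # 10
```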

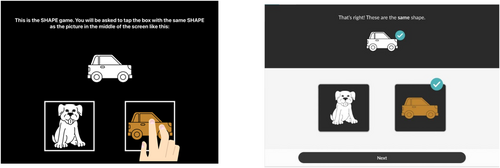

2.3.2 MyCog 2.0

The upgraded version of MyCog contains the same subtests as the original version but changes aspects of the user experience. Most notably, the animated demonstration videos were replaced with brief, simplified instructions followed by interactive practice trials with feedback. In contrast to the original version, MyCog 2.0 requires participants to pass the practice trials before advancing to the live items. New timeout rules for inactive participants were also instituted: 1 min of inactivity triggers a pop-up with the options “Resume” or “Quit,” and after another minute of inactivity, the test ends. These changes were implemented to save time and to ensure that participants attend to and understand the measures (Figure 1), and they are expected to reduce the average MyCog assessment time in clinics. In addition, the design of the assessment was modified to be more user friendly and cohesive, as determined by a user interface/user experience (UI/UX) designer familiar with the intended patient population. These changes include a larger text size, contrasting color themes, simplified visuals, and a lowered reading level for all text (Figure 2). Lastly, an introduction screen was added to set expectations about the nature and flow of the screener. The introduction screen emphasizes that users will participate in a routine screening test that includes two activities, sets the expectation that the tasks are challenging, and asks participants to give their best effort.
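The two-stage inactivity rule can be expressed as a small state function. This is an illustrative Python sketch, not MyCog source code: the names `SessionState` and `session_state` are hypothetical, the thresholds are treated as inclusive, and it assumes that any participant interaction (including tapping “Resume”) resets the inactivity timer to zero.

```python
from enum import Enum


class SessionState(Enum):
    ACTIVE = "active"  # participant is working normally
    PROMPT = "prompt"  # pop-up offering "Resume" or "Quit" is shown
    ENDED = "ended"    # test is terminated for inactivity


PROMPT_AFTER_S = 60.0      # 1 min of inactivity triggers the pop-up
END_AFTER_PROMPT_S = 60.0  # another minute of inactivity ends the test


def session_state(idle_seconds: float) -> SessionState:
    """Map seconds since the last interaction to a session state."""
    if idle_seconds >= PROMPT_AFTER_S + END_AFTER_PROMPT_S:
        return SessionState.ENDED
    if idle_seconds >= PROMPT_AFTER_S:
        return SessionState.PROMPT
    return SessionState.ACTIVE


for t in (30, 60, 119, 120):
    print(t, session_state(t).value)
# 30 active
# 60 prompt
# 119 prompt
# 120 ended
```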

Original MyCog (left) animated demonstration versus MyCog 2.0 (right) interactive practice.

Original MyCog (left) UI/UX design versus MyCog 2.0 (right) updated design.

2.3.3 Mini-Cog

The Mini-Cog is a brief and widely used cognitive screening tool (Borson et al. 2000). It consists of two components: a three-word recall (TWR) task and a clock drawing task. In the recall task, which assesses short-term memory, individuals are presented with three unrelated words and asked to remember and later recall them. In the clock drawing task, which evaluates visuospatial and executive functioning, participants are asked to draw a clock face showing a specified time. The clock drawing task is administered during the delay between presentation and recall of the three words. We used the TWR score as a point of convergent validity for the MyCog 2.0 PSM subtest.

2.3.4 Verbal Paired Associates Test

The Verbal Paired Associates (VPA) Test is a subtest of the Wechsler Memory Scale–Fourth Edition (WMS-IV; Wechsler 2009). The examiner presents a list of 14 randomly paired words, and the participant must recall the second word of each pair when given the first. This process is repeated over four trials, with feedback provided after each item. After a 20-min delay, participants are asked to recall the paired words again without performance feedback. We used raw accuracy scores as a point of convergent validity for the MyCog 2.0 PSM subtest.

2.3.5 Color-Word Interference Test

The Color-Word Interference (CWI) Test, a subtest of the Delis-Kaplan Executive Function System (D-KEFS; Delis et al. 2001), evaluates inhibitory control and task-switching ability. In this task, participants are shown a series of color words (e.g., “red,” “blue,” “green”) printed in font colors that do not match the word itself. Participants must quickly and accurately name the font color while ignoring the word's meaning. The task thus assesses both the ability to suppress the automatic response of reading the word and the ability to switch between rules. As a point of convergent validity for the MyCog 2.0 DCCS subtest, we used the Inhibition Switching condition (in which participants refrain from reading the word unless it appears in a box, which prompts them to switch to reading), using a standardized score derived from completion time and errors.

2.3.6 Trail Making Test

The D-KEFS Trail Making Test (TMT; Delis et al. 2001) assesses executive functions such as attention and cognitive flexibility. It includes five conditions: Visual Scanning, Number Sequencing, Letter Sequencing, Number-Letter Switching, and Motor Speed. As a point of convergent validity for the MyCog 2.0 DCCS subtest, we used the Number-Letter Switching condition, because it evaluates cognitive flexibility and is closely related to the task switching assessed in the Color-Word Interference and DCCS tasks. We used a standardized score based on errors and completion time.

2.4 Analysis

All analyses were conducted in R statistical software (R Core Team 2023). Internal consistency was assessed with a different method for each task, chosen to fit the task's structure. For DCCS, we repeatedly split the trials into random halves, computed the rate-correct score (number of correct trials/total response time) for each half, applied the Spearman–Brown correction to the correlation between halves, and took the median of these bootstrapped split-half coefficients. Because correct or incorrect placement of one item influences the ability to place later items correctly, the structure of the PSM inherently violates the “independence of responses” assumption required by many reliability metrics in classical test theory. Reliability was therefore estimated using McDonald's ωt, which uses a factor analytic approach and is relatively robust to such violations (Stensen and Lydersen 2022). McDonald's ωt was calculated from a one-factor structure with each of the 12 image tile placements, scored dichotomously (correctly or incorrectly placed), as an indicator. We allowed the errors of the first three items to correlate to account for primacy bias (Detterman and Ellis 1970), and we used a weighted least squares mean and variance adjusted (WLSMV) estimator to account for the dichotomous items (Brown 2015).
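The two reliability estimates can be sketched in Python as below. This is an illustration, not the authors' R code: `split_half_rcs` and `omega_total` are hypothetical names. The split-half routine assumes each replicate randomly permutes the trials into two halves (participants are not resampled) and returns the 2.5th, 50th, and 97.5th percentiles of the Spearman–Brown coefficients. `omega_total` uses the linear one-factor formula with error covariances counted as error variance; for dichotomous items estimated with WLSMV, loadings and residual variances are on the latent-response scale, and software that computes a categorical version of omega may return somewhat different values.

```python
import numpy as np


def split_half_rcs(rts, correct, n_splits=1000, seed=0):
    """Random split-half reliability of the rate-correct score (RCS).

    rts, correct: arrays of shape (n_participants, n_trials).
    Returns the 2.5th, 50th, and 97.5th percentiles of the
    Spearman-Brown-corrected split-half correlations.
    """
    rng = np.random.default_rng(seed)
    rts = np.asarray(rts, dtype=float)
    correct = np.asarray(correct, dtype=float)
    n_trials = rts.shape[1]
    coefs = np.empty(n_splits)
    for b in range(n_splits):
        perm = rng.permutation(n_trials)
        half1, half2 = perm[: n_trials // 2], perm[n_trials // 2 :]
        # RCS per participant in each half: correct trials / total RT.
        rcs1 = correct[:, half1].sum(axis=1) / rts[:, half1].sum(axis=1)
        rcs2 = correct[:, half2].sum(axis=1) / rts[:, half2].sum(axis=1)
        r = np.corrcoef(rcs1, rcs2)[0, 1]
        coefs[b] = 2 * r / (1 + r)  # step up to full test length
    return np.percentile(coefs, [2.5, 50, 97.5])


def omega_total(loadings, residual_vars, residual_covs=()):
    """McDonald's omega total for a one-factor model (factor variance = 1).

    omega = (sum of loadings)^2 /
            ((sum of loadings)^2 + sum of residual variances
             + 2 * sum of residual covariances)
    """
    common = float(np.sum(loadings)) ** 2
    error = float(np.sum(residual_vars)) + 2.0 * float(np.sum(residual_covs))
    return common / (common + error)
```

Positive residual covariances, such as those allowed among the first three PSM items, enter the denominator as error variance and therefore lower omega relative to a model without them.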

To evaluate construct validity, we used a confirmatory factor analysis (CFA) approach with maximum likelihood estimation. CFA tests whether observed measures (e.g., MyCog subtests) load onto hypothesized latent factors that align with gold-standard neuropsychological constructs, and it accounts for measurement error better than observed correlations do. By estimating the strength of the relationships between observed variables and their latent constructs, CFA helps establish whether measures intended to assess the same construct are indeed closely related, providing evidence of convergent validity and, more broadly, construct validity. Although confirmatory factor methods require larger samples than direct correlations, they provide a more comprehensive analysis of construct validity by testing entire measurement models and accounting for measurement error (Brown 2015). For instance, a model comparison test showing that a two-factor model fits significantly better than a one-factor model provides stronger support for distinct but related constructs within a test battery than comparisons of individual correlations do (Rönkkö and Cho 2022).

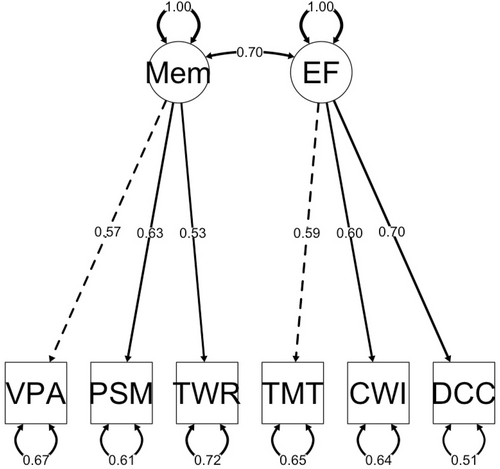

We hypothesized a two-factor structure with (1) DCCS loading onto an executive functioning factor along with the D-KEFS Color-Word Interference Inhibition Switching and Trail Making Number-Letter Switching conditions, and (2) PSM loading onto an episodic memory factor along with WMS-IV Verbal Paired Associates and Mini-Cog Three-Word Recall. We compared the hypothesized two-factor model to a single-factor model to evaluate whether the tests measure distinct constructs.

To evaluate equivalence between the two versions of MyCog, we employed Bayesian differential item functioning (DIF) testing methods. Bayesian methods were selected because they can accommodate both dichotomous and continuous items as well as relatively small data sets (Sinharay 2013). For each model, four Markov chain Monte Carlo (MCMC) chains were run for 2000 iterations each, of which 500 were used for warm-up. Convergence was assessed using the R-hat statistic, with values below 1.1 considered acceptable, and bulk and tail effective sample sizes above 100 were taken to indicate reliable parameter estimates. For the dichotomous items (i.e., the 12 PSM items and the correct/incorrect scores on the 30 DCCS items), we fit a Bayesian logistic regression model in which the log-odds of responding correctly to each item were modeled as a function of test version. We tested only for uniform DIF to minimize computational burden and increase the likelihood of convergence in our relatively small sample. A naive normal prior distribution with a mean of 0 and a standard deviation of 1 was specified for the regression coefficients. Given the continuous, positively skewed nature of the response time data, a Gamma distribution with a log link function was used, with a normal prior of N(0, 5) for the regression coefficients. Items were flagged for DIF if the 95% credible interval of the version effect did not include zero, indicating a credible difference between test versions in accuracy or response time.
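One way to write the two uniform-DIF models described above, for item $j$ and participant $p$, is shown below. This is a reconstruction under assumptions rather than the fitted model specification: $v_p$ is a hypothetical indicator coded 1 for MyCog 2.0 and 0 for the original MyCog, each item is modeled separately, the Gamma distribution is parameterized by shape $\alpha_j$ and rate $\alpha_j/\mu_{pj}$ so that its mean is $\mu_{pj}$, the second argument of each normal prior is taken to be a standard deviation, and priors on the intercepts and on $\alpha_j$ are left unspecified because the text does not report them.

$$
\begin{aligned}
\operatorname{logit} \Pr(y_{pj} = 1) &= \beta_{0j} + \beta_{1j}\, v_p, && \beta_{1j} \sim \mathcal{N}(0, 1),\\
t_{pj} &\sim \operatorname{Gamma}\!\left(\alpha_j,\ \alpha_j / \mu_{pj}\right), && \\
\log \mu_{pj} &= \gamma_{0j} + \gamma_{1j}\, v_p, && \gamma_{1j} \sim \mathcal{N}(0, 5).
\end{aligned}
$$

Under this sketch, item $j$ is flagged when $0$ lies outside the 95% credible interval of $\beta_{1j}$ (accuracy) or $\gamma_{1j}$ (response time). Because of the log link, $\exp(\gamma_{1j})$ is the ratio of expected response times on MyCog 2.0 relative to the original version.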

3 Results

3.1 Internal Consistency

The median Spearman–Brown split-half reliability coefficient for DCCS indicated high internal consistency (0.92), and the 2.5th and 97.5th percentiles (0.89 and 0.94, respectively) indicated stability across different random splits. For the one-factor PSM model, the chi-square test of model fit was significant, χ2(51) = 93.48, p < 0.001, indicating some misfit between the model and the observed data. However, other fit statistics that are less sensitive to sample size suggested moderate to good fit (CFI = 0.96; TLI = 0.95; RMSEA = 0.07, 90% CI [0.044, 0.087]; SRMR = 0.11). Under this unidimensional model, ωt was 0.84, indicating strong internal consistency.

3.2 Construct Validity

The hypothesized two-factor model showed excellent fit to the data, χ2(8) = 6.73, p = 0.566, CFI = 1.000, TLI = 1.014, RMSEA = 0.000 (90% CI [0.000, 0.077], p = 0.813), SRMR = 0.030; see Figure 3. The two-factor model fit the data significantly better than a one-factor model (Δχ2(1) = 12.86, p < 0.001; ΔAIC = 10.8; ΔBIC = 7.6), suggesting that the indicators measured two distinct constructs.
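Because the one-factor model is the two-factor model with the factor correlation fixed at 1, the two models are nested and differ by one degree of freedom. The following Python sketch shows how the reported comparison statistics relate to one another under maximum likelihood, assuming an unscaled likelihood-ratio difference and N = 200; `nested_comparison` is a hypothetical helper. For a 1-df difference the chi-square upper tail equals erfc(√(Δχ²/2)), so only the standard library is needed; for other degrees of freedom, `scipy.stats.chi2.sf` could be used instead.

```python
import math


def nested_comparison(delta_chisq: float, n: int, delta_df: int = 1):
    """p value, ΔAIC, and ΔBIC for nested ML models differing by delta_df parameters.

    Only delta_df == 1 is supported by the closed-form p value used here.
    Positive ΔAIC/ΔBIC favour the less constrained (two-factor) model.
    """
    if delta_df != 1:
        raise ValueError("closed-form tail probability implemented for 1 df only")
    p = math.erfc(math.sqrt(delta_chisq / 2.0))  # P(chi-square with 1 df > delta_chisq)
    d_aic = delta_chisq - 2 * delta_df
    d_bic = delta_chisq - delta_df * math.log(n)
    return p, d_aic, d_bic


p, d_aic, d_bic = nested_comparison(12.86, n=200)
print(f"p = {p:.1e}, dAIC = {d_aic:.2f}, dBIC = {d_bic:.2f}")
# p = 3.4e-04, dAIC = 10.86, dBIC = 7.56
```

These values are close to the reported ΔAIC = 10.8 and ΔBIC = 7.6, and the p value agrees with the reported p < 0.001; small discrepancies can arise from rounding of the reported Δχ².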

Two-factor model of MyCog 2.0. CWI = DKEFS Color Word Interference Inhibition Switching Condition; DCC = MyCog 2.0 Dimensional Change Card Sorting; EF = executive functioning; Mem = episodic memory; PSM = MyCog 2.0 Picture Sequence Memory; TMT = DKEFS Trail Making Test Number-Letter Switching Condition; TWR = Mini-Cog Three-Word Recall; VPA = WMS-IV Verbal Paired Associates.

3.3 Differential Item Functioning

All models demonstrated adequate convergence, as evidenced by R-hat < 1.1 and bulk and tail effective sample sizes well above 100. None of the dichotomous items (the 12 PSM items and the 30 DCCS accuracy items) demonstrated significant DIF; however, response times on five of the DCCS items varied significantly by test version. Response times were between 0.29 and 0.39 s faster on MyCog 2.0 for items 9, 14, 18, and 22, and 0.37 s slower on item 2 (Table 2). Of the four items that were faster on MyCog 2.0, three required participants to switch from shape matching on the previous item to color matching. MyCog 2.0 also produced faster responses on one item that required shape matching after a previous item that also required shape matching (item 14), but slower responses on another item with the same conditions (item 2).

| Reaction time item | Condition | Estimate | Std. error | 95% CrI lower | 95% CrI upper |
|---|---|---|---|---|---|
| DCCS_2 | Shape, same | 0.37 | 0.18 | 0.01 | 0.69 |
| DCCS_9 | Color, switch | −0.34 | 0.12 | −0.58 | −0.13 |
| DCCS_14 | Shape, same | −0.34 | 0.15 | −0.64 | −0.05 |
| DCCS_18 | Color, switch | −0.39 | 0.11 | −0.61 | −0.18 |
| DCCS_22 | Color, switch | −0.29 | 0.12 | −0.53 | −0.06 |

4 Discussion

Findings suggest that MyCog 2.0, the newly updated version of MyCog, reliably measures episodic memory and executive functioning, as expected. The subtests demonstrated strong internal consistency (split-half reliability = 0.92 for DCCS; ωt = 0.84 for PSM) and evidence of construct validity via confirmatory factor analysis. Performance on the episodic memory test did not vary by test version, nor did accuracy on the executive functioning test. However, response times on five of the executive functioning items differed significantly by test version, with faster response times on four items in MyCog 2.0. Three of those items required participants to switch from matching by shape to matching by color. Switching between tasks is known to be more cognitively taxing than repeating the same task, typically requiring more processing time (e.g., Cutini et al. 2021; Rogers and Monsell 1995). It is thus possible that the interactive practice in MyCog 2.0 explains the task to participants more clearly, or that the abbreviated introduction reduces test fatigue, resulting in better attention on the live items. It is also possible that this finding is an artifact of the different samples in the MyCog and MyCog 2.0 validation studies, as we were unable to give both versions of the test to the same participants. Regardless, further investigation of the response time items that demonstrated DIF is warranted. These findings are also limited by the lack of racial, ethnic, and educational diversity in the samples, particularly in the sample that completed the original version of MyCog. Although the findings suggest construct validity of MyCog 2.0 and psychometric comparability with the original version, the clinical validity of the tool is still unknown. A clinical validation study in a sample of healthy and impaired adults will be necessary to determine MyCog 2.0's sensitivity and specificity in detecting cognitive impairment.

5 Conclusions

The results of this study support the psychometric properties of MyCog 2.0 as a reliable and valid self-administered cognitive screening tool for older adults. The tool demonstrated high internal consistency for both the executive function and episodic memory subtests, as well as excellent construct validity, as indicated by a well-fitting two-factor model. Furthermore, there was no evidence of differential item functioning (DIF) between MyCog 2.0 and the original version, aside from minor variations in response times on some executive function items. These differences could be attributed to the improved user experience in MyCog 2.0, which may have enhanced user attention and task comprehension. While these findings suggest that MyCog 2.0 maintains the psychometric integrity of the original version, further research is needed to assess its clinical validity, including its sensitivity and specificity for detecting cognitive impairment in samples of both healthy and impaired adults. These results pave the way for future clinical validation studies and support MyCog 2.0 as an accessible and effective tool for early cognitive screening in older adults.

Author Contributions

Stephanie Ruth Young: conceptualization, writing – original draft, writing – review and editing, supervision, investigation, methodology, formal analysis. Yusuke Shono: conceptualization, writing – original draft, writing – review and editing, methodology, formal analysis. Katherina K. Hauner: writing – review and editing. Jiwon Kim: methodology, formal analysis, writing – review and editing. Elizabeth McManus Dworak: methodology, formal analysis, writing – review and editing. Greg Joseph Byrne: investigation, project administration. Callie Madison Jones: investigation, project administration. Julia Noelani Yoshino Benavente: investigation, project administration. Michael S. Wolf: conceptualization, supervision, methodology, funding acquisition. Cindy J. Nowinski: conceptualization, supervision, methodology, writing – review and editing, funding acquisition.

Conflicts of Interest

M.S.W. reports grants from the NIH, Gordon and Betty Moore Foundation, and Eli Lilly, and personal fees from Pfizer, Sanofi, Luto UK, University of Westminster, Lundbeck and GlaxoSmithKline outside the submitted work. All other authors report no conflicts of interest.

Open Research

Data Availability Statement

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.